This guide will take you through the process of developing, deploying, and monitoring machine learning models within your Snowflake environment using DataRobot and Snowpark.

The use case for this lab is a fraud detection use case, and we will perform supervised machine learning by building a binary classification model to help predict whether a transaction is likely to be fraud.

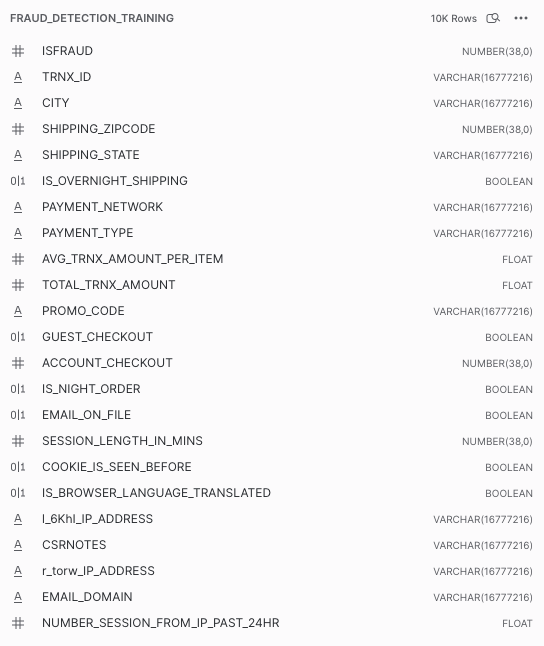

The historical dataset we will be working with today contains details about the transaction. Here is a description of the features:

Prerequisites

- Basic knowledge of SQL, and database concepts and objects

- Basic understanding of Snowpark and Python

- Basic understanding of data science and machine learning

What You'll Learn

- Utilizing Snowpark and DataRobot-hosted Notebooks for feature engineering on your Snowflake data

- Applying DataRobot Machine Learning to build, analyze model performance, and transform it into actionable insights.

- Basic understanding of data science and machine learning

- Using DataRobot AI Production capabilities and Snowpark to simplify the deployment and monitoring processes within the Snowflake environment

What You'll Need

- A Snowflake Account (if you are using an enterprise account through your organization, it is unlikely that you will have the privileges to use the

ACCOUNTADMINrole, which is required for this lab). Be advised to configure it to be ‘Enterprise' and on AWS. - Download the following notebook that we will work on most of the lab

Download the notebook

What You'll Build

- Snowflake objects, including tables and warehouses, to scale and manage your data

- Acquire a training dataset from a Snowflake table using Snowpark for Python

- Feature engineering: analyze data and create new features using Snowpark

- Build a new DataRobot project with over 50+ machine learning models automatically to measure their performance and interpret

- Analyze and evaluate model performance and explainability using DataRobot predictive experimentation

- Deploy the recommended model to Snowflake using DataRobot AI Production

- Score the model via Snowpark for Java

- Monitor the model with DataRobot AI Production

The first thing you will need to do is download the following .sql file that contains a series of SQL commands we will execute throughout this lab.

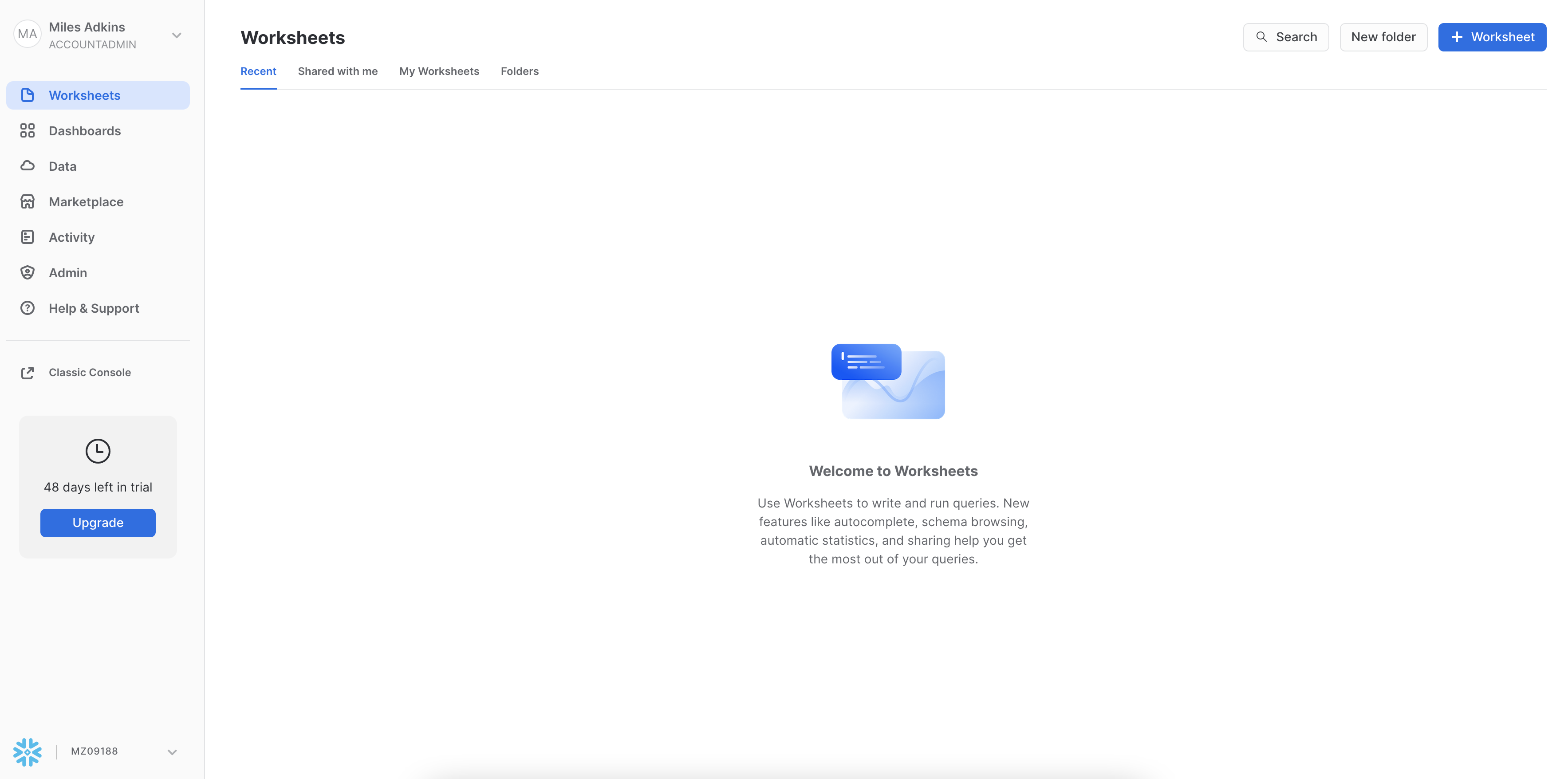

At this point, log into your Snowflake account and open a new Worksheet. If you have just created a free trial account, you will land in the Learn section. Simply navigate to the Worksheets tab on the left and click + Worksheet in the top right hand corner.

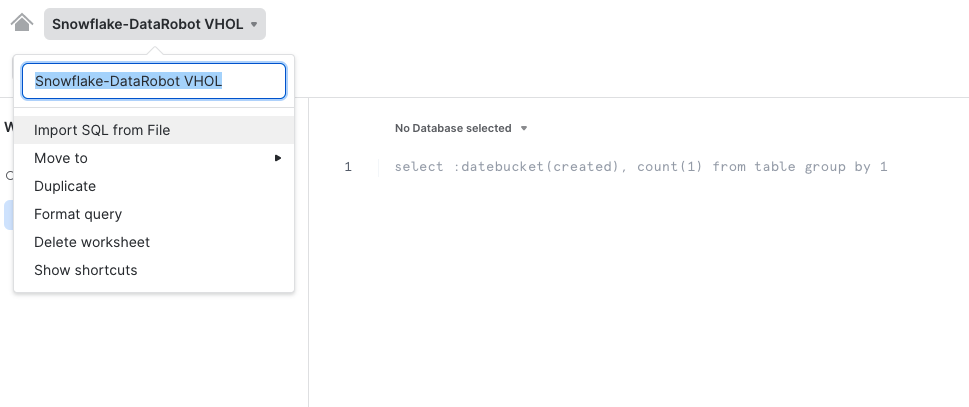

To ingest our script in the Snowflake UI, click the down arrow next to the time your notebook was created in the top left hand side of your screen and load our Snowflake_Datarobot_HOL.sql script using the Import SQL from File button. You can also change the name of this worksheet to "Snowflake-DataRobot VHOL"

Snowflake provides "worksheets" as the spot for you to execute your code. For each worksheet you create, you will need to set the "context" so the worksheet knows how to behave. A "context" in Snowflake is made up of 4 distinctions that must be set before we can perform any work: the "role" we want to act as, the "database" and "schema" we want to work with, and the "warehouse" we want to perform the work.

Lets go ahead and set the role we want to act as, which will be ACCOUNTADMIN to begin with. This can either be done manually in the UI or programmatically in a worksheet (ACCOUNTADMIN is the default role for a first time user). Lets do so programmatically in our worksheet by executing our first line of code:

USE ROLE accountadmin;

To execute this code, all we need to do is place our cursor on the line we wish to run and then either hit the "run" button at the top left of the worksheet or press Cmd/Ctrl + Enter.

In addition to traditional SQL statements, Snowflake Data Definition (DDL) commands, such as setting the worksheet context, we will also execute the following code within the worksheet. The "role": ACCOUNTADMIN, we already set earlier. To set our "database", "schema", and "warehouse", execute the following code:

USE DATABASE SANDBOX;

USE SCHEMA public;

USE WAREHOUSE DEMO_WH;

Each step throughout the guide has an associated SQL command to perform the work we are looking to execute, and so feel free to step through each action running the code line by line as we walk through the lab.

As part of the lab, we are going to create 2 tables:

TRAIN_DATA– The dataset that we will use to train our machine learning modelSCORING_DATA– Out of sample data that we will use to score and validate our model

(We will create both both through DataRobot Notebooks)

At this point in time, we have our data sitting in an optimized table within Snowflake that is available for a variety of different downstream functions. Snowflake does not offer machine learning capabilities, and therefore, happily partners with the leading data science and machine learning partners in the industry. We are on a mission to help us figure out which transactions are most likely to be fraud and DataRobot can help us build a machine learning model to answer that question.

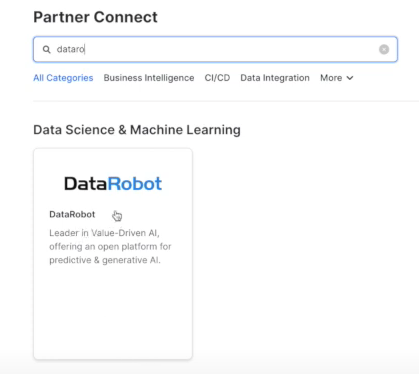

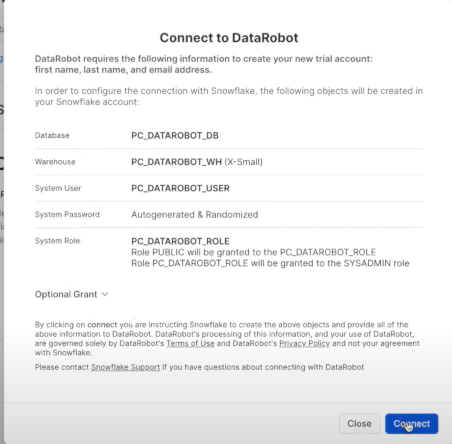

Snowflake's Partner Connect feature allows you to seamlessly get started with partner tools and manages most of the connection details for you to get up and running as quickly as possible. To get here, click our "Home" button and then navigate to "Admin" and then "Partner Connect". This should take you to the following screen where you will see many of the Snowflake partners, and through a simple method of setting up an account and integration, allow you to quickly move data into a partner tool. Click the "Data Science & ML" category and click "DataRobot".

We have all the Snowflake objects we need created already, so press "Connect".

And activate our account, so press "Activate".

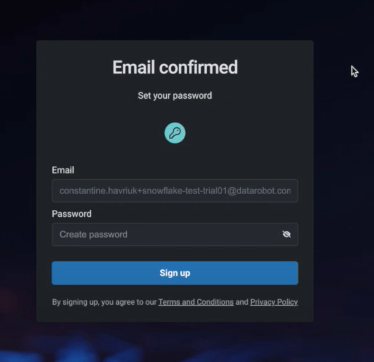

Go ahead and set up a password, and then click "Sign up" for us to proceed.

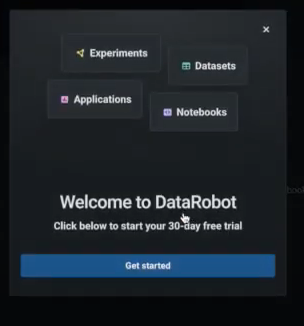

DataRobot is software-as-a-service (SaaS) application deployed on the cloud for your convenience, provides all the tools you need to confidently build, deploy, manage, and govern both generative and predictive AI solutions for enterprises.

Click "Get started" for us to proceed.

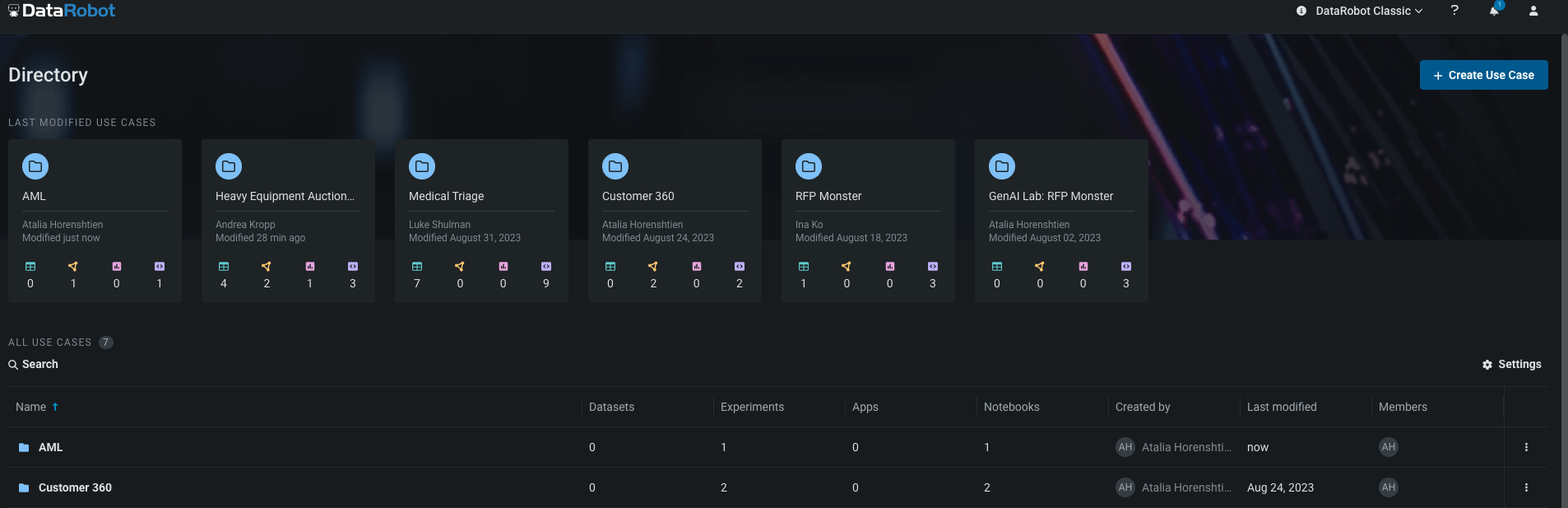

This is Workbench, and is the next user interface and a logical update from DataRobot Classic. All new features will be built in the new Workbench.

DataRobot provides easy access to all the necessary resources. Personal and shared projects are displayed, acting as a centralized folder for organized AI assets. It eliminates the need for manual folder management and allows quick access to datasets, experiments, apps, and notebooks. The organized approach facilitates tracking, management, asset consolidation, collaboration, and efficiency for individuals and teams.

Let's create our Fraud Detection use case: On the top right click ‘+ Create Use Case' and name it ‘Fraud Detection'

Interacting with DataRobot is flexible. You can choose between a low-code option or code-first options.

In the code-first option, you have the freedom to use DataRobot as an API call within your preferred notebook, similar to any modern data science library.

Alternatively, you can utilize DataRobot's fully managed and hosted Notebooks to keep everything organized across teams, which is what we are going to do today.

Now we can bring our Notebook: click on the ‘Notebooks' tab and import the notebook from the prerequisites

These notebooks support Python and R, letting data scientists easily run code with all essential tools on the platform. They offer built-in revision history for simple checkpointing and version reverting.

DataRobot's notebooks negate the need for adjusting compute resources and images, allowing focus on work with one click, devoid of handling basic infrastructure tasks. Additionally, they provide common code snippets for time-saving and focus on creative experimentation.

Integration with OpenAI offers superior Generative AI assistance, automated code generation, enhanced datasets, insights, and optimization, boosting productivity and performance.

Before running the notebook or executing any cells, ensure the following environment variable:

- Make sure the environment is Python 3.8

- Configure the following environmental variables (with your own Snowflake credentials):

- ‘snowflake_user': Snowflake user name

- ‘snowflake_password': Snowflake password

- ‘snowflake_account': Snowflake account name (You can find it in the confirmation email from Snowflake - from the Dedicated Login URL: https://abcd-ab03384.snowflakecomputing.com - ‘abcd-ab03384' is the account name)

Most of this workflow is executed by the notebook, but you will create a deployment and monitor it via the DataRobot GUI. Supporting documentation for these steps are included in the notebook.

Let's move to run cells and the instructions in the notebook. We will come back to the guide before moving to the ‘Model Deployment' stage.

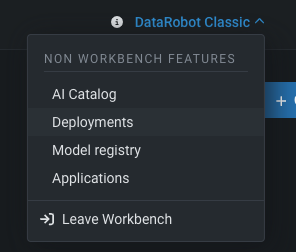

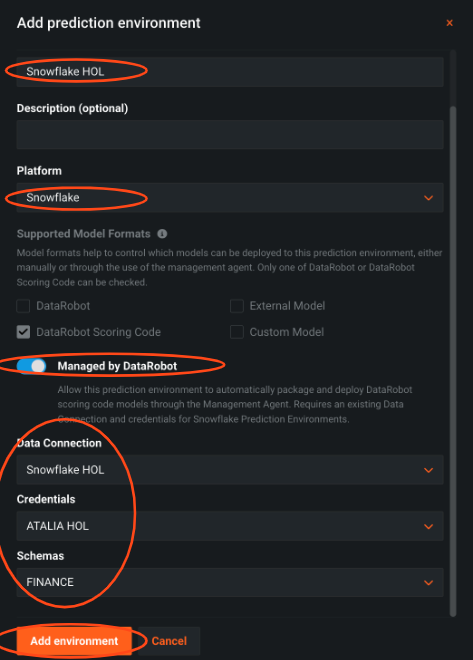

First, we need to change to ‘DataRobot Classic'. ‘Deployments' and our AI production capabilities are in ‘Classic' and will be part of the new experience 11/7, and all functionality available in Classic will eventually migrate into the new experience.

Because we would like to deploy a model into the ‘Finance' schema we need to create a new data connection.

Click on the top right icon » ‘Data connections' » Put your credentials + Add parameter - the schema ‘FINANCE'

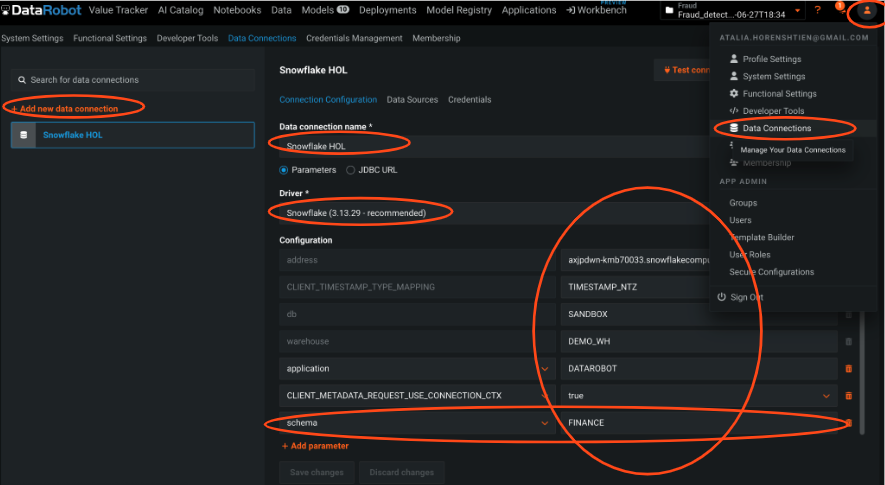

Because we are going to deploy the model to Snowflake, we need to set up a Snowflake prediction environment.

This means that DataRobot will completely manage and automatically control this prediction environment, including model deployment and replacement.

Press on the ‘Deployment' Tab » ‘Prediction Environments' tab »Add prediction environment

- Create prediction environment

- Choose the Snowflake data connection you have

- Press add environment

To keep a version of the model we are going to deploy, we will add it to the registry.

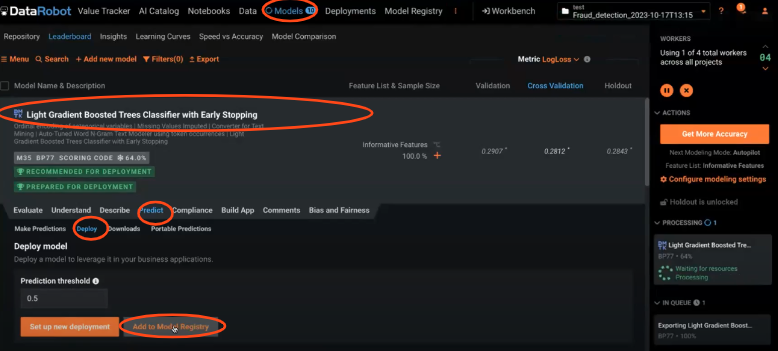

Press on the ‘Models' tab » press on the desired model » press on ‘Predict' tab » press on ‘Deploy' tab » click ‘Add to Model Registry'.

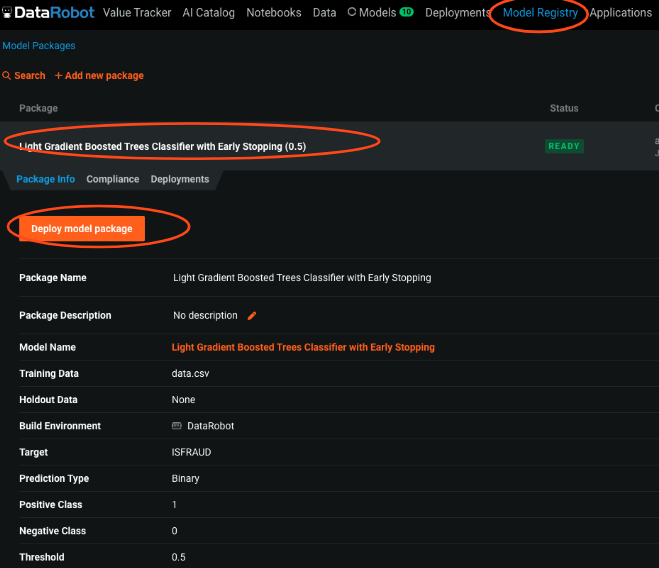

Press on the ‘Model registry' tab » press on ‘Model Packages' tab » press on the desired model » ‘Deploy model package'.

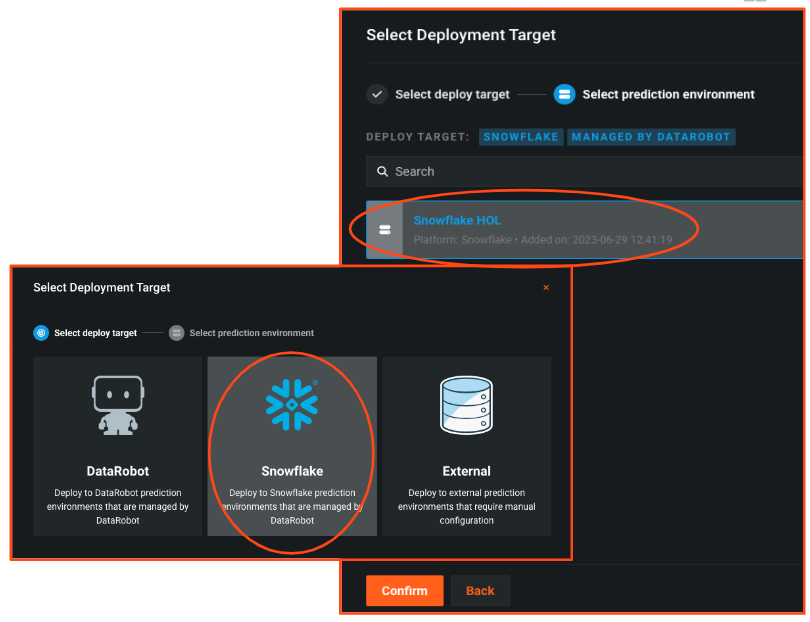

You can pick where to deploy it, here we choose Snowflake and choosing the prediction environment we set earlier.

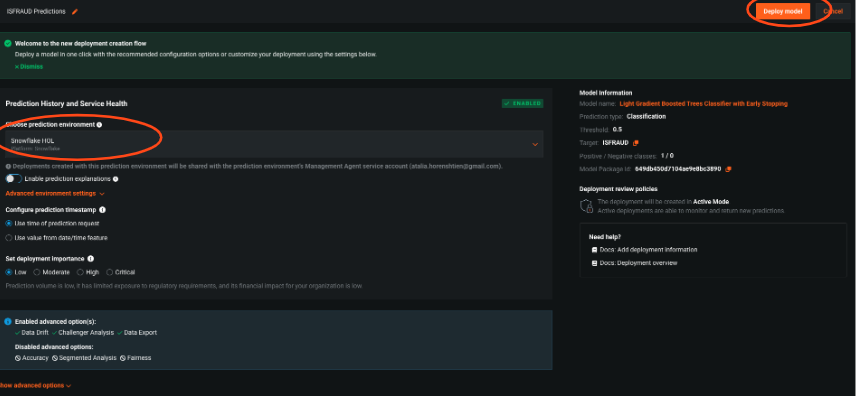

We are ready to deploy our model to Snowflake. Create the deployment by clicking ‘Deploy model'. The JAVA UDF will be pushed automatically to your Snowflake environment.

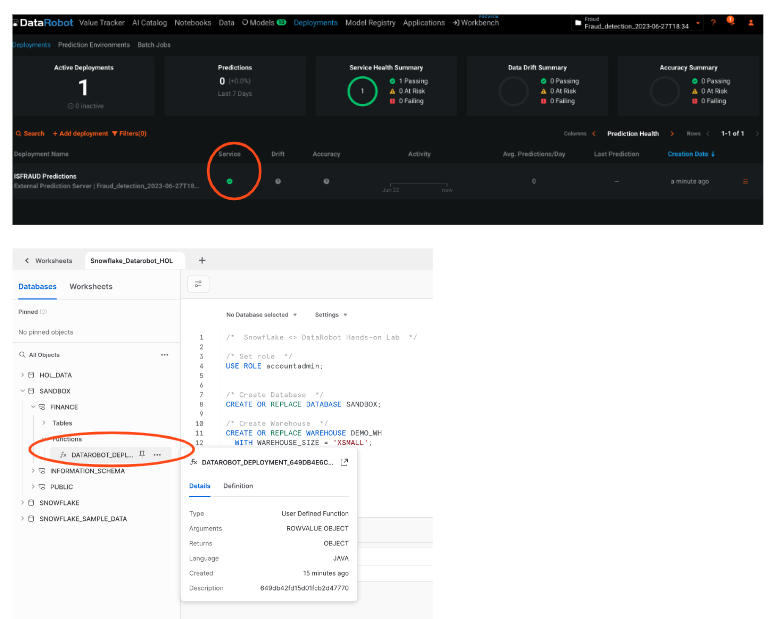

To ensure the deployment process was successful, you can go to the ‘Deployment' tab and look for the deployment. We can see that the management agent is now running, and we have a health indicator in the GUI.

To ensure the UDF was pushed you can check if the UDF appears in your snowflake account, under your schema.

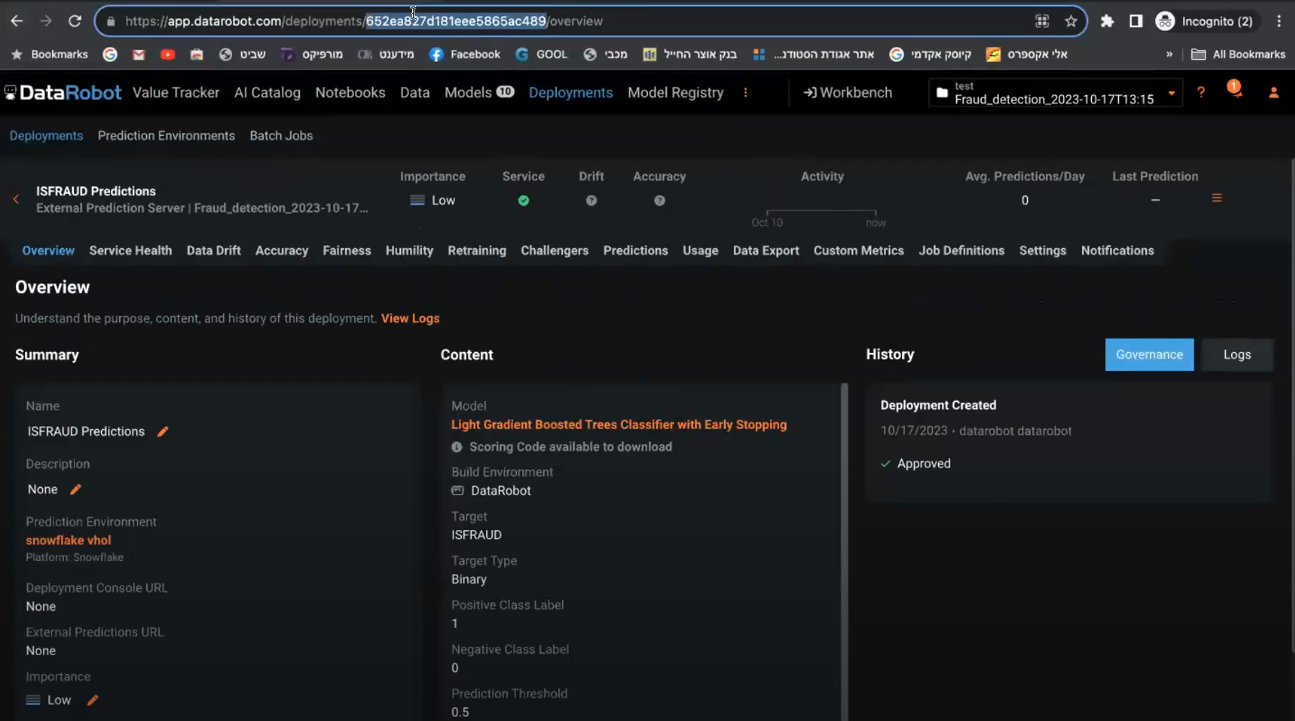

Please copy the deployment id from the URL, we will use it with the notebook.

Now we can go back to the notebook to score our prediction data with Snowpark, see the results and come back here in the ‘Model monitoring' section

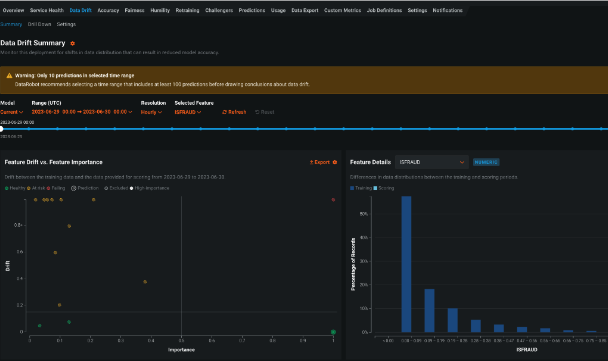

To make sure business decisions are aligned with external and internal factors, you need to monitor the model performance and understand if you need to replace or retrain it.

DataRobot provides the flexibility to do that with models that are deployed in platforms outside of DataRobot, such as Snowflake.

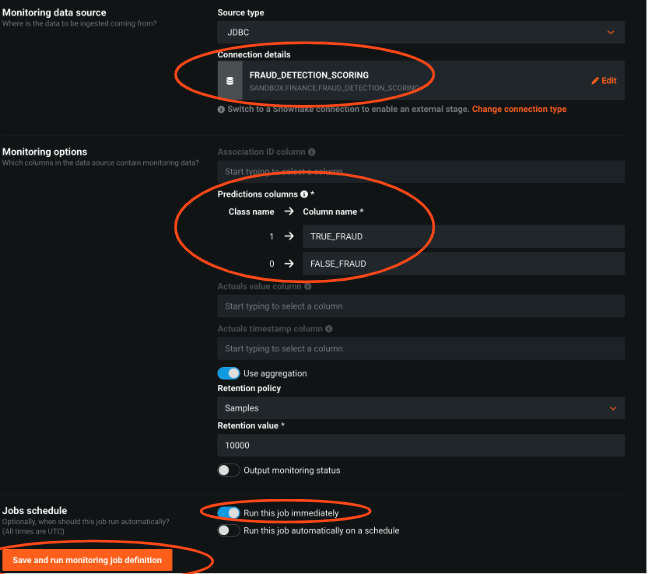

Let's define a monitoring job to read past predictions and/or actuals from a database table.

Press on the ‘Deployment' Tab » Choose the deployed model » Press on Job Definitions tab » Add job definition

- Configure the table and columns to track - we choose the predictions columns ‘TRUE_FRAUD' and ‘FALSE_FRAUD'.Optional: track actuals for model accuracy

- Decide job schedule - you can decide if this job will run immediately or on a scheduled basis. This decision is based on your business rules and how often there is new data. For this lab, let's choose immediately

Press ‘Save and run monitoring job definition'

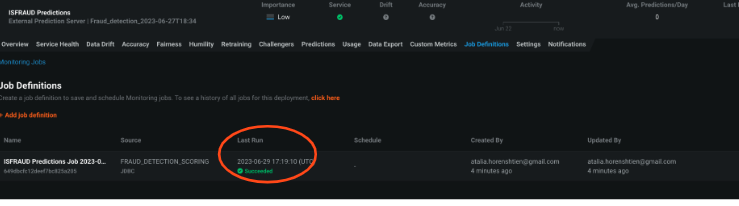

We see that the job is being executed and right after that the job has been completed

DataRobot will monitor the predictions and the actual values and organize them over time in our Monitoring Engine to track data drift and accuracy for the model.

These out-of-the-box graphs will change over time and can help determine the model's accuracy.

Together with Snowflake and DataRobot you can:

- Empower Your Data Capabilities: By merging the power of Snowpark and DataRobot-hosted Notebooks, enhance your feature engineering capabilities on Snowflake data. Dive deep into the world of data science and machine learning to transform raw data into actionable insights seamlessly.

- Experience Comprehensive Building Tools: Elevate your data management skills by constructing vital Snowflake objects and experience automated machine learning, ensuring optimal performance and understanding.

- Efficient Deployment & Seamless Monitoring: Simplify and streamline your deployment journey in the Snowflake environment. With the amalgamation of DataRobot AI Production capabilities and Snowpark, ensure not just the deployment but also the scoring and proactive monitoring of your models, ensuring maximum uptime and efficiency.

For more, check our partnership page