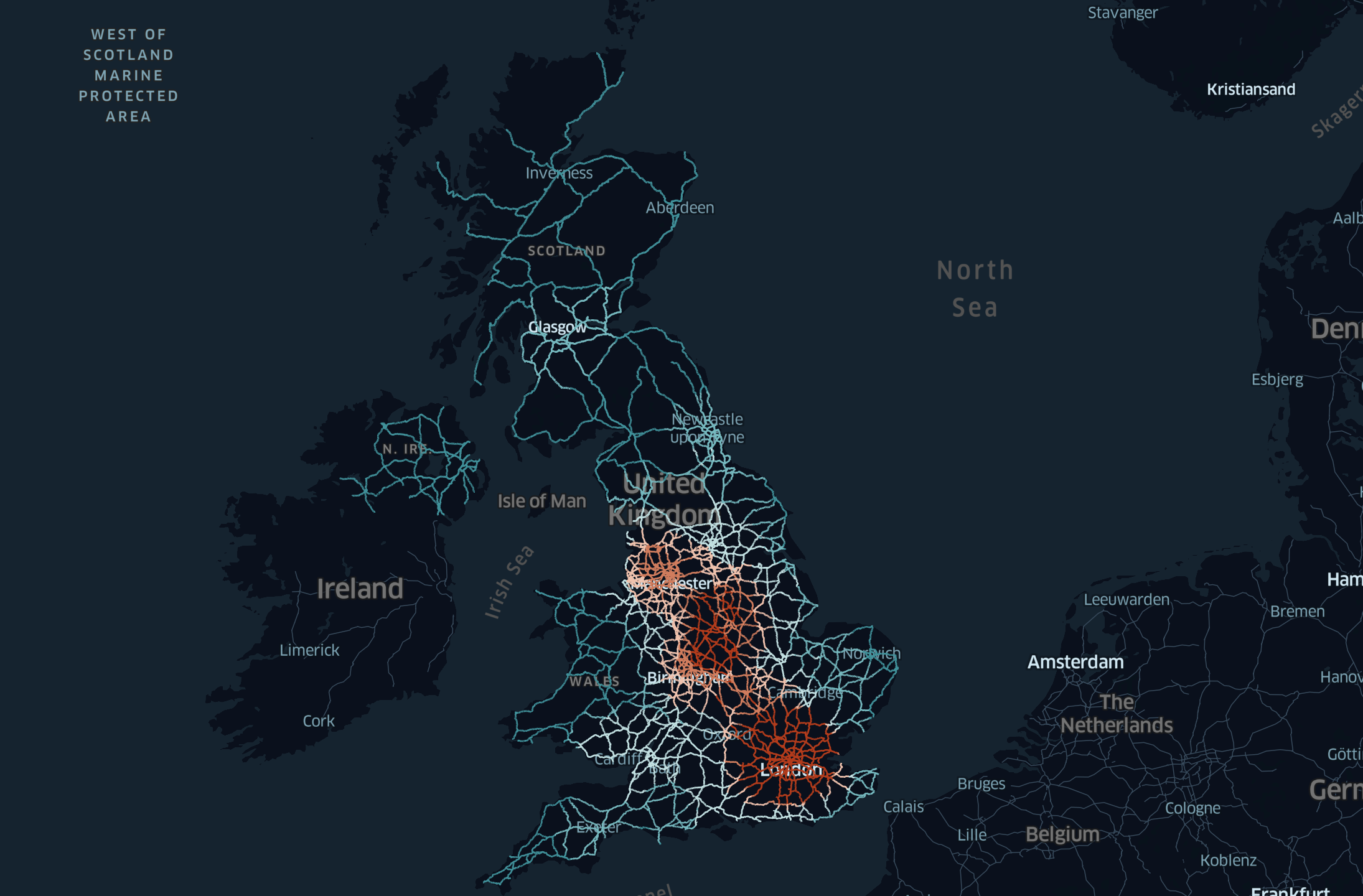

Snowflake offers a rich toolkit for predictive analytics with a geospatial component. It includes two data types and specialized functions for transformation, prediction, and visualization. This guide is divided into multiple labs, each covering a separate use case that showcases different features for a real-world scenario.

Prerequisites

- Understanding of Discrete Global Grid H3

- Recommend: Understanding of Geospatial Data Types and Geospatial Functions in Snowflake

- Recommended: Complete Geospatial Analysis using Geometry and Geography Data Types quickstart

- Recommended: Complete Performance Optimization Techniques for Geospatial queries quickstart

What You'll Learn

In this quickstart, you will use H3, Time Series, Cortex ML and Streamlit for ML use cases. The quickstart is broken up into separate labs:

- Lab 1: Geospatial 101

- Lab 2: Energy grids analysis using GEOMETRY

- Lab 3: Geocoding and Reverse Geocoding

- Lab 4: Forecasting time series on a map

- Lab 5: Sentiment analysis of customer reviews

- Lab 6: Processing unstructured geospatial data

- Lab 7: Creating Interactive Maps with Kepler.gl

When you complete this quickstart, you will have gained practical experience in several areas:

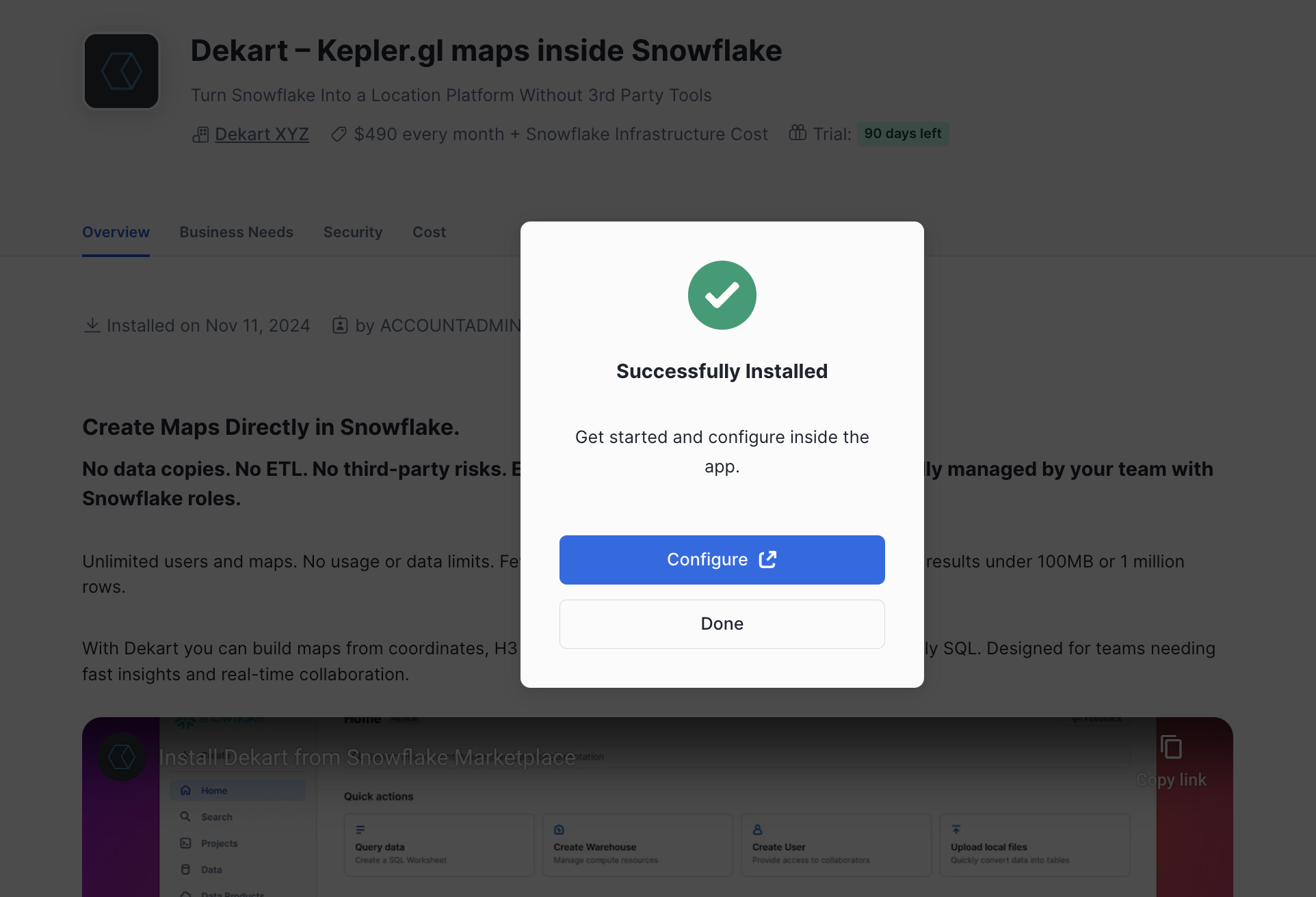

- Acquiring data from the Snowflake Marketplace

- Loading data from external storage

- Transforming data using H3 and Time Series functions

- Training models and predicting results with Cortex ML

- Using LLM for analysing textual data

- Visualizing data with Streamlit

- Processing unstructured geospaial data (GeoTIFF, Shapefiles)

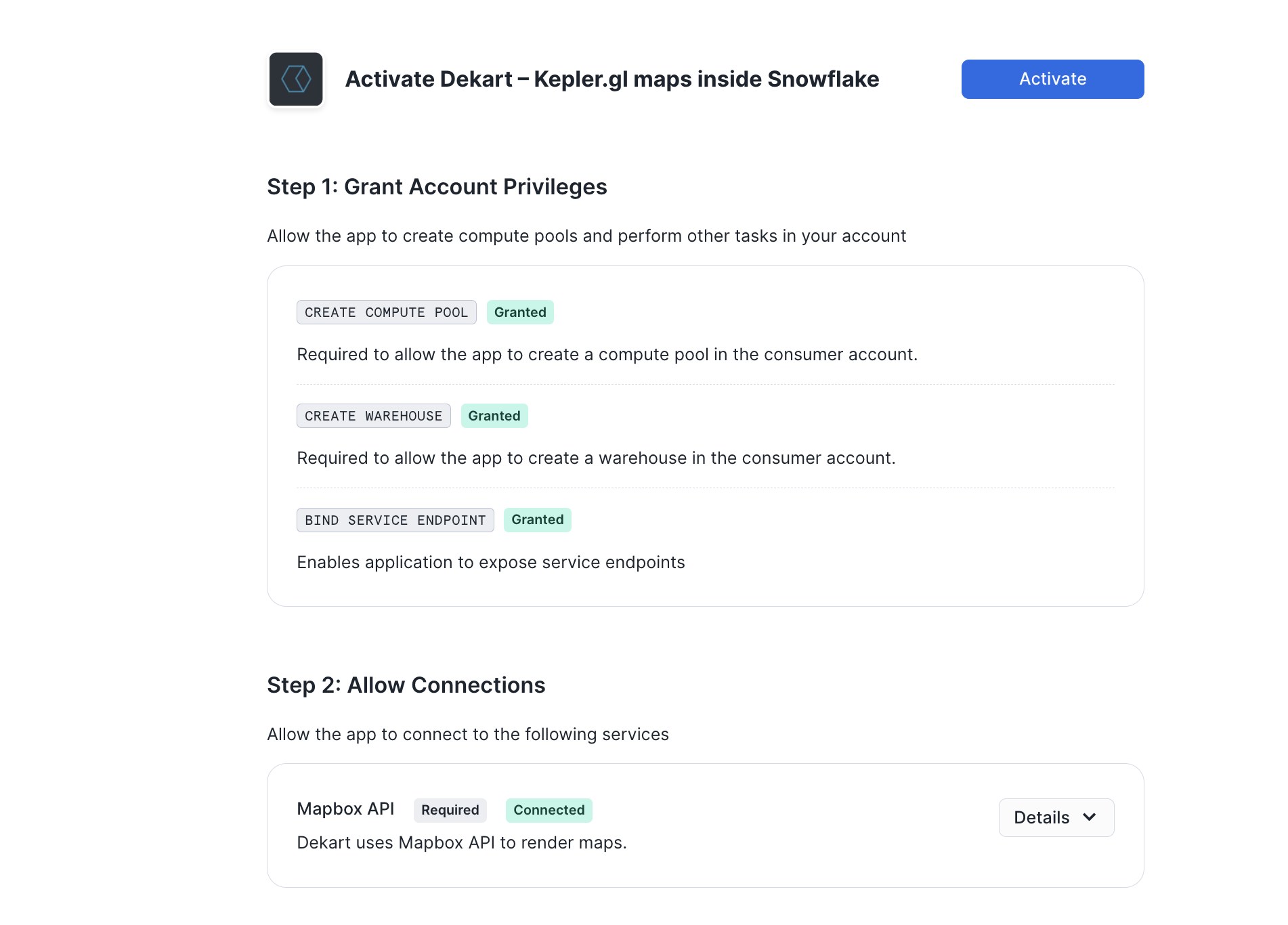

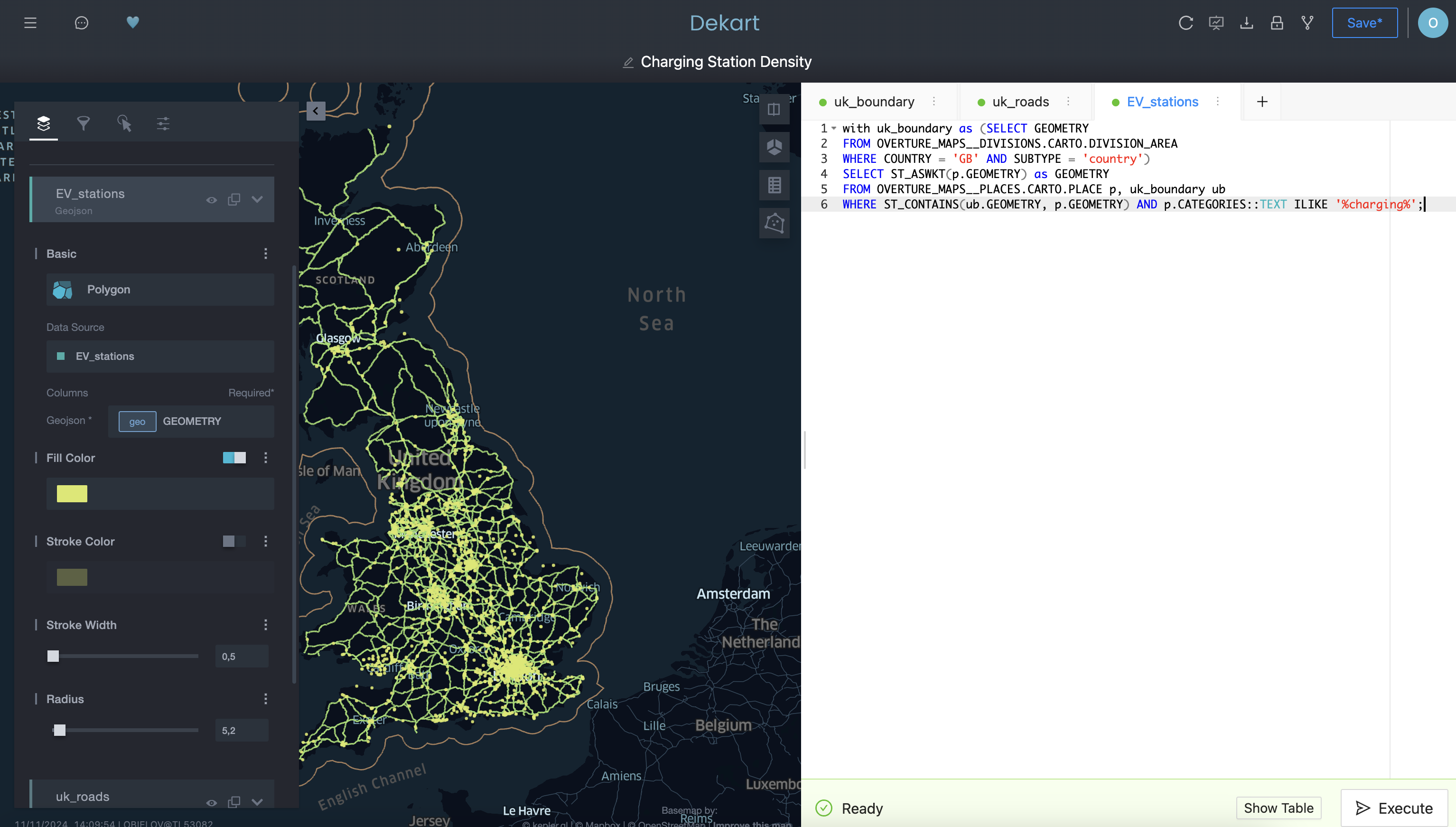

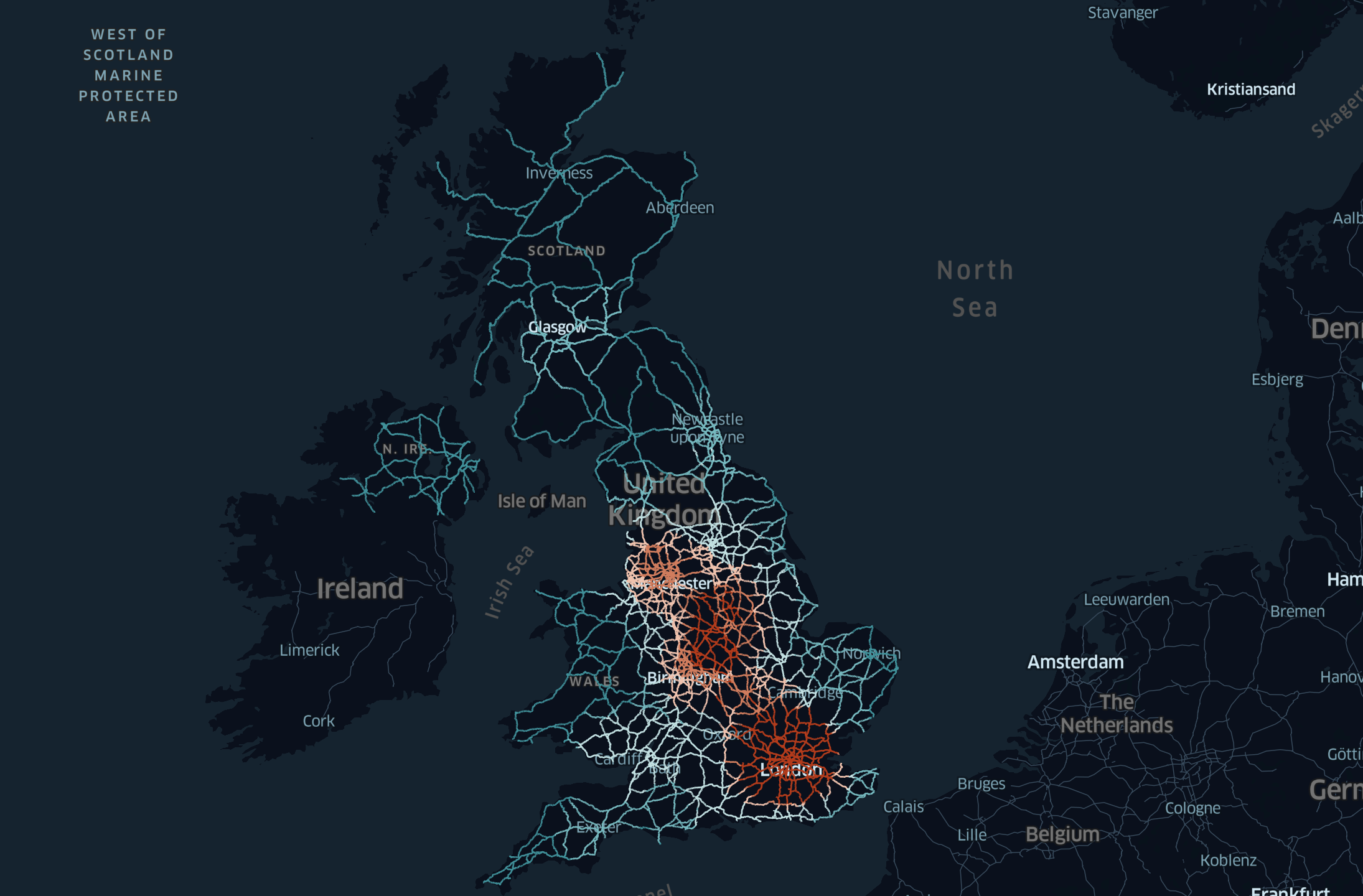

- Using geo visualisation apps available in Marketplace

- Processing unstructured geospaial data (GeoTIFF, Shapefiles)

- Using geo visualisation apps available in Marketplace

What You'll Need

- A supported Snowflake Browser

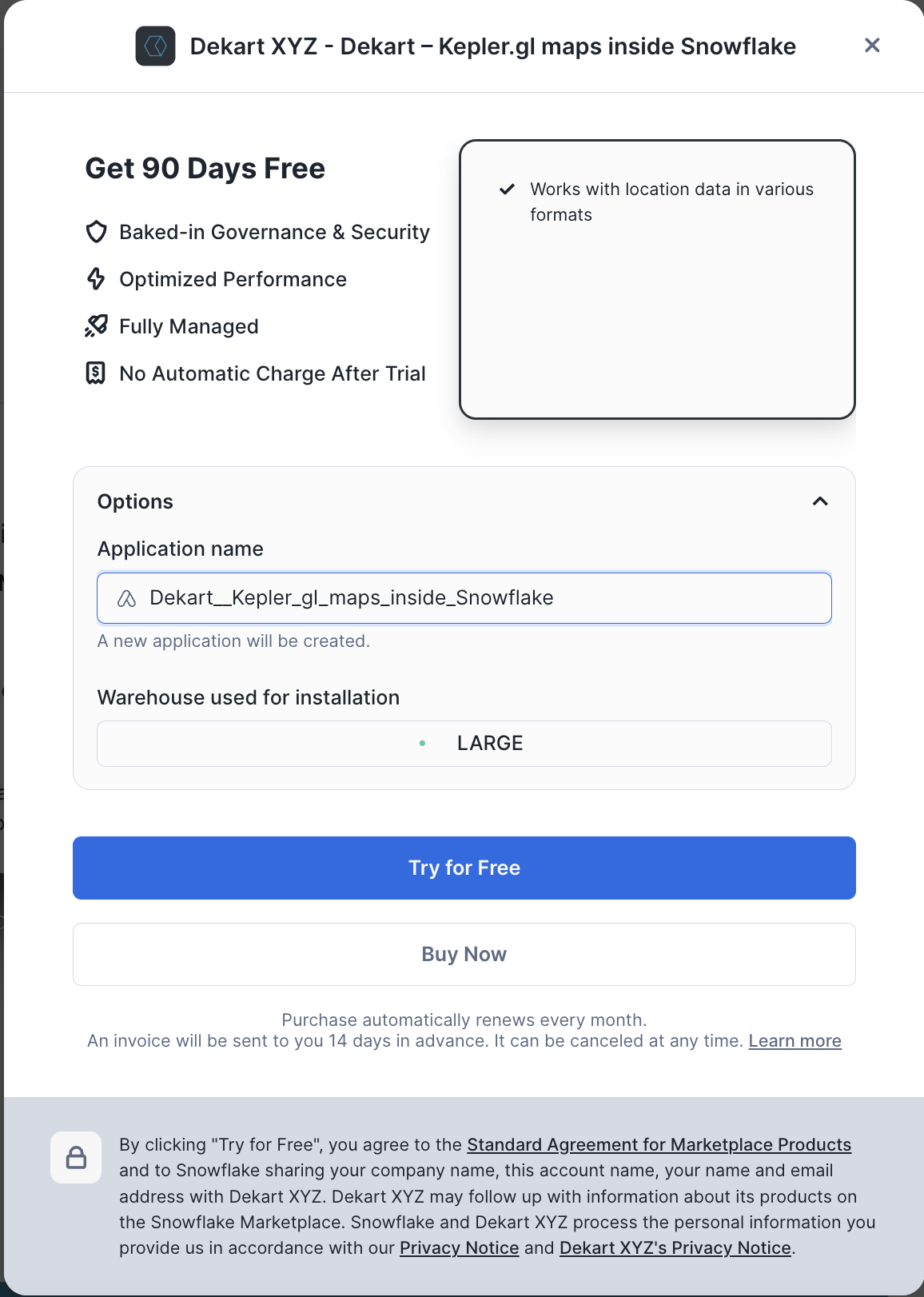

- Sign-up for a Snowflake Trial OR have access to an existing Snowflake account with the

ACCOUNTADMINrole or theIMPORT SHAREprivilege. Select the Enterprise edition, AWS as a cloud provider and US East (Northern Virginia) or EU (Frankfurt) as a region.

If this is the first time you are logging into the Snowflake UI, you will be prompted to enter your account name or account URL that you were given when you acquired a trial. The account URL contains your account name and potentially the region. You can find your account URL in the email that was sent to you after you signed up for the trial.

Click Sign-in and you will be prompted for your username and password.

Increase Your Account Permission

The Snowflake web interface has a lot to offer, but for now, switch your current role from the default SYSADMIN to ACCOUNTADMIN. This increase in permissions will allow you to create shared databases from Snowflake Marketplace listings.

Create a Virtual Warehouse

You will need to create a Virtual Warehouse to run queries.

- Navigate to the

Admin > Warehousesscreen using the menu on the left side of the window - Click the big blue

+ Warehousebutton in the upper right of the window - Create a LARGE Warehouse as shown in the screen below

Be sure to change the Suspend After (min) field to 5 min to avoid wasting compute credits.

Navigate to the query editor by clicking on Worksheets on the top left navigation bar and choose your warehouse.

- Click the + Worksheet button in the upper right of your browser window. This will open a new window.

- In the new Window, make sure

ACCOUNTADMINandMY_WH(or whatever your warehouse is named) are selected in the upper right of your browser window.

Create a new database and schema where you will store datasets in the GEOGRAPHY data type. Copy & paste the SQL below into your worksheet editor, put your cursor somewhere in the text of the query you want to run (usually the beginning or end), and either click the blue "Play" button in the upper right of your browser window, or press CTRL+Enter or CMD+Enter (Windows or Mac) to run the query.

CREATE DATABASE IF NOT EXISTS advanced_analytics;

// Set the working database schema

USE ADVANCED_ANALYTICS.PUBLIC;

USE WAREHOUSE my_wh;

ALTER SESSION SET GEOGRAPHY_OUTPUT_FORMAT='WKT';

1. Overview

Geospatial query capabilities in Snowflake are built upon a combination of data types and specialized query functions that can be used to parse, construct, and perform calculations on geospatial objects. Additionally, geospatial data can be visualized in Snowflake using Streamlit. This guide provides an entry-level introduction to geospatial analytics and visualization in Snowflake. In this lab, you will explore a sample use case of identifying the closest healthcare facilities near a geographic point, and you will learn:

- How to view the GEOGRAPHY data type with supported formats

- How to construct a geospatial object from latitude and longitude values

- How to extract latitude and longitude from a geography column

- How to perform geospatial calculations and filtering

- How to visualize geospatial data using Streamlit in Snowflake

2. Acquire Data

For this lab oyu will use Overture Maps - Places dataset from Marketplace. Now you can acquire sample geospatial data from the Snowflake Marketplace.

- Navigate to the Marketplace screen using the menu on the left side of the window

- Search for

Overture Mapsin the search bar - Find and click the

Overture Maps - Placestile

On the Get Data screen, keep the default database name OVERTURE_MAPS__PLACES, as all of the future instructions will assume this name for the database.

Congratulations! You have just created a shared database from a listing on the Snowflake Marketplace.

As one additional preparation step you need to complete is to import libraries that you will use in this Lab, navigate to the Packages drop-down in the upper right of the Notebook and search for pydeck. Click on pydeck to add it to the Python packages.

3. Understanding Snowflake Geospatial Formats

Snowflake supports GeoJSON, Well-Known Text (WKT) and Well-Known Binary (WKB) formats for loading and unloading geospatial data. You can use session or account parameters to control which of these format types is used to display geospatial data in your query results.

Run the query below to explicitly set your geography output format to JSON.

ALTER SESSION SET GEOGRAPHY_OUTPUT_FORMAT = 'GEOJSON';

In the following two queries you will familiarize yourself with Overture Maps - Points of Interest data. First, check the size of the table:

SELECT COUNT(*) FROM OVERTURE_MAPS__PLACES.CARTO.PLACE;

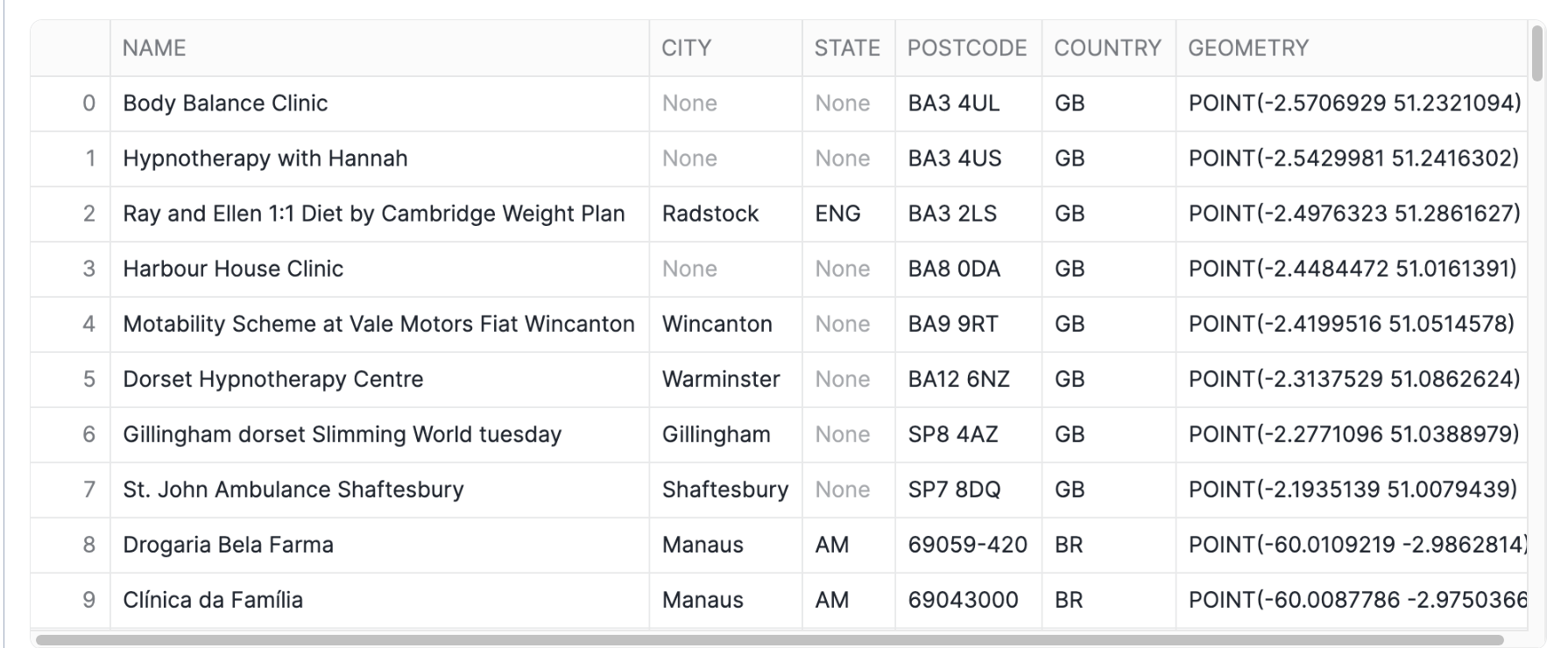

In the following query, you will examine a geography column containing data on health and medical facilities.

SELECT

NAMES['primary']::STRING AS NAME,

ADDRESS.value:element:locality::STRING AS CITY,

ADDRESS.value:element:region::STRING AS STATE,

ADDRESS.value:element:postcode::STRING AS POSTCODE,

ADDRESS.value:element:country::STRING AS COUNTRY,

GEOMETRY

FROM OVERTURE_MAPS__PLACES.CARTO.PLACE,

LATERAL FLATTEN(INPUT => ADDRESSES:list) AS ADDRESS

WHERE CATEGORIES['primary'] ='health_and_medical'

LIMIT 100;

Note that while the column is named GEOMETRY in this data source, it is stored in a GEOGRAPHY column in Snowflake, using the coordinate system ESPG:4326, also known as WGS 84. This coordinate system uses latitude and longitude as coordinates and is the most widely used coordinate system worldwide. If you are storing geospatial data using latitude and longitude, then the GEOGRAPHY data type is the most suitable for storing your data.

The contents of the GEOMETRY column in the output above, formatted as GeoJSON.

Run the code below to update your session geography output format to Well-Known Text (WKT), which is arguably more readable.

ALTER SESSION SET GEOGRAPHY_OUTPUT_FORMAT = 'WKT';

Now rerun the Overture maps query. Notice how the contents of the GEOMETRY column are displayed.

SELECT

NAMES['primary']::STRING AS NAME,

ADDRESS.value:element:locality::STRING AS CITY,

ADDRESS.value:element:region::STRING AS STATE,

ADDRESS.value:element:postcode::STRING AS POSTCODE,

ADDRESS.value:element:country::STRING AS COUNTRY,

geometry

FROM OVERTURE_MAPS__PLACES.CARTO.PLACE,

LATERAL FLATTEN(INPUT => ADDRESSES:list) AS ADDRESS

WHERE CATEGORIES['primary'] ='health_and_medical'

LIMIT 100;

Constructing geospatial objects

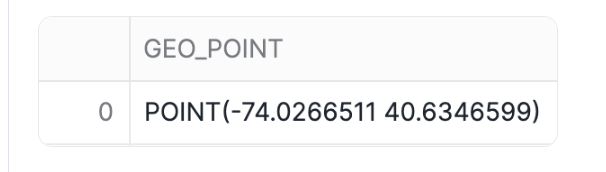

You can use constructor functions such as ST_MAKEPOINT, ST_MAKELINE and ST_POLYGON to create geospatial objects. Run the code below to create a geo point from latitude and longitude.

SELECT ST_MAKEPOINT(-74.0266511, 40.6346599) GEO_POINT

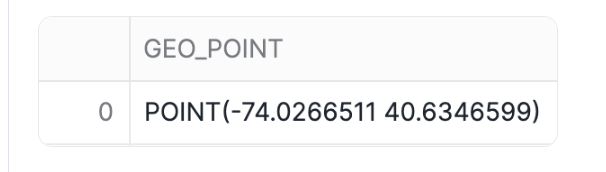

Alternatively, you can use the TO_GEOGRAPHY constructor function to create geospatial values. TO_GEOGRAPHY is a general purpose constructor where ST_MAKEPOINT specifically makes a POINT object. Run the code below:

SELECT TO_GEOGRAPHY('POINT(-74.0266511 40.6346599)') GEO_POINT

4. Visualizing spatial data in Streamlit

Using Streamlit, you can visualize your data using tools like st.map or popular python packages like pydeck.

Add a new Python cell and run the code below to see how you can use st.map to show a point on a map.

import streamlit as st

import pandas as pd

# Define the coordinates for the point

latitude = 40.755702

longitude = -73.986226

# Create a DataFrame with the point

data = pd.DataFrame({

'lat': [latitude],

'lon': [longitude]

})

# Display the map with the point

st.title("Display a Points with st.map")

st.map(data)

Accessing coordinates of a geospatial object

Sometimes you need to do the opposite - access individual coordinates in a geospatial object. You can do that with accessor functions ST_X and ST_Y to access longitude and latitude accordingly. Run the code below:

SELECT

NAMES['primary']::STRING AS NAME,

ST_X(GEOMETRY) AS LONGITUDE,

ST_Y(GEOMETRY) AS LATITUDE,

FROM OVERTURE_MAPS__PLACES.CARTO.PLACE,

LATERAL FLATTEN(INPUT => ADDRESSES:list) AS ADDRESS

WHERE CATEGORIES['primary'] ='health_and_medical'

LIMIT 100;

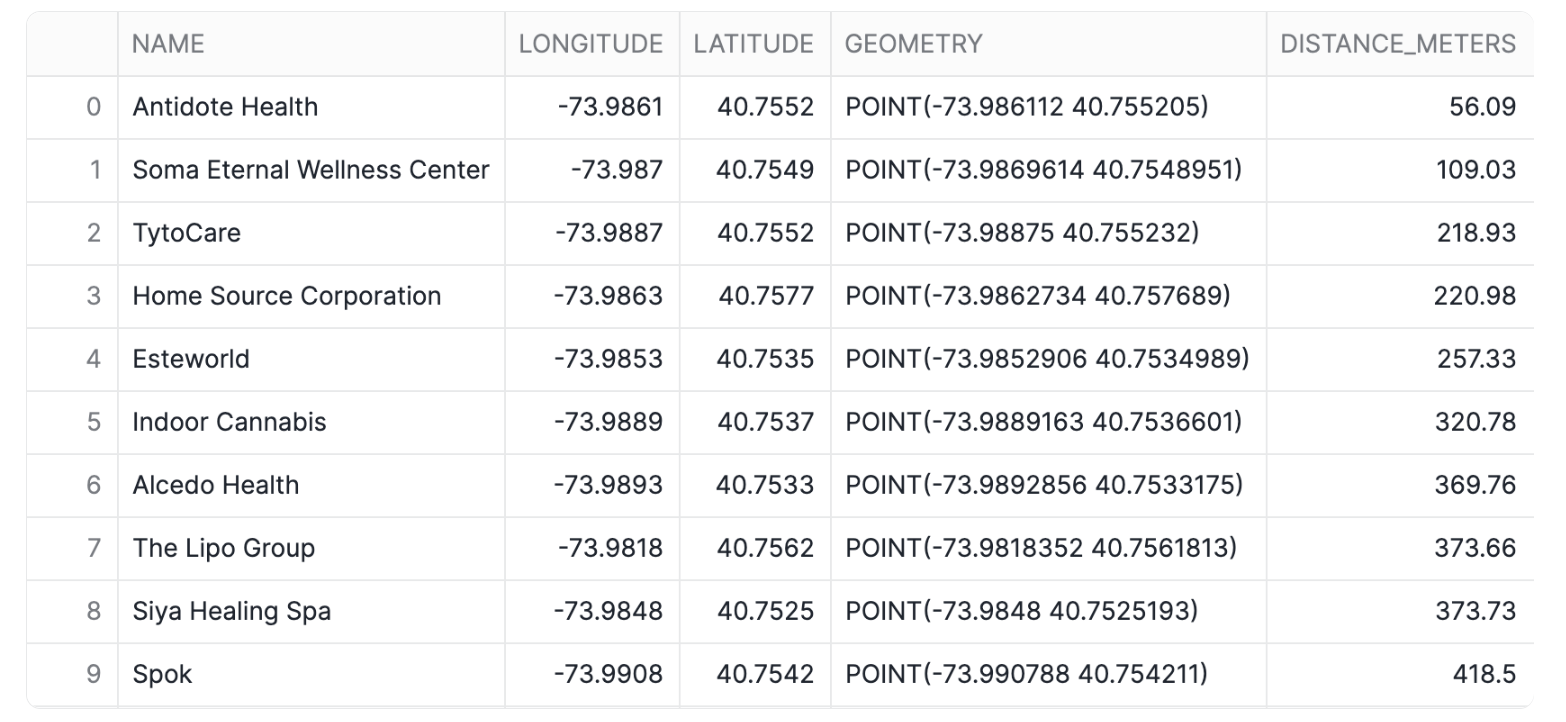

Finding the nearest points and calculating distances

You can use relationship and measurement functions to perform spatial joins and other analytical operations. For example, you can use ST_DWITHIN to find health facilities that are within a mile from you, and you can use ST_DISTANCE to measure the actual distance between points.

Run the code below to obtain the ten nearest health facilities that are no more than approximately a mile (1,600 meters) away from a given point. The records are sorted by distance.

SELECT

NAMES['primary']::STRING AS NAME,

ST_X(GEOMETRY) AS LONGITUDE,

ST_Y(GEOMETRY) AS LATITUDE,

GEOMETRY,

ST_DISTANCE(GEOMETRY,TO_GEOGRAPHY('POINT(-73.986226 40.755702)'))::NUMBER(6,2)

AS DISTANCE_METERS

FROM OVERTURE_MAPS__PLACES.CARTO.PLACE

WHERE CATEGORIES['primary'] ='health_and_medical' AND

ST_DWITHIN(GEOMETRY,ST_MAKEPOINT(-73.986226, 40.755702),1600) = TRUE

ORDER BY 5 LIMIT 10;

Notice that this query runs on a table with over 53M rows. Snowflake's geospatial data types are very efficient!

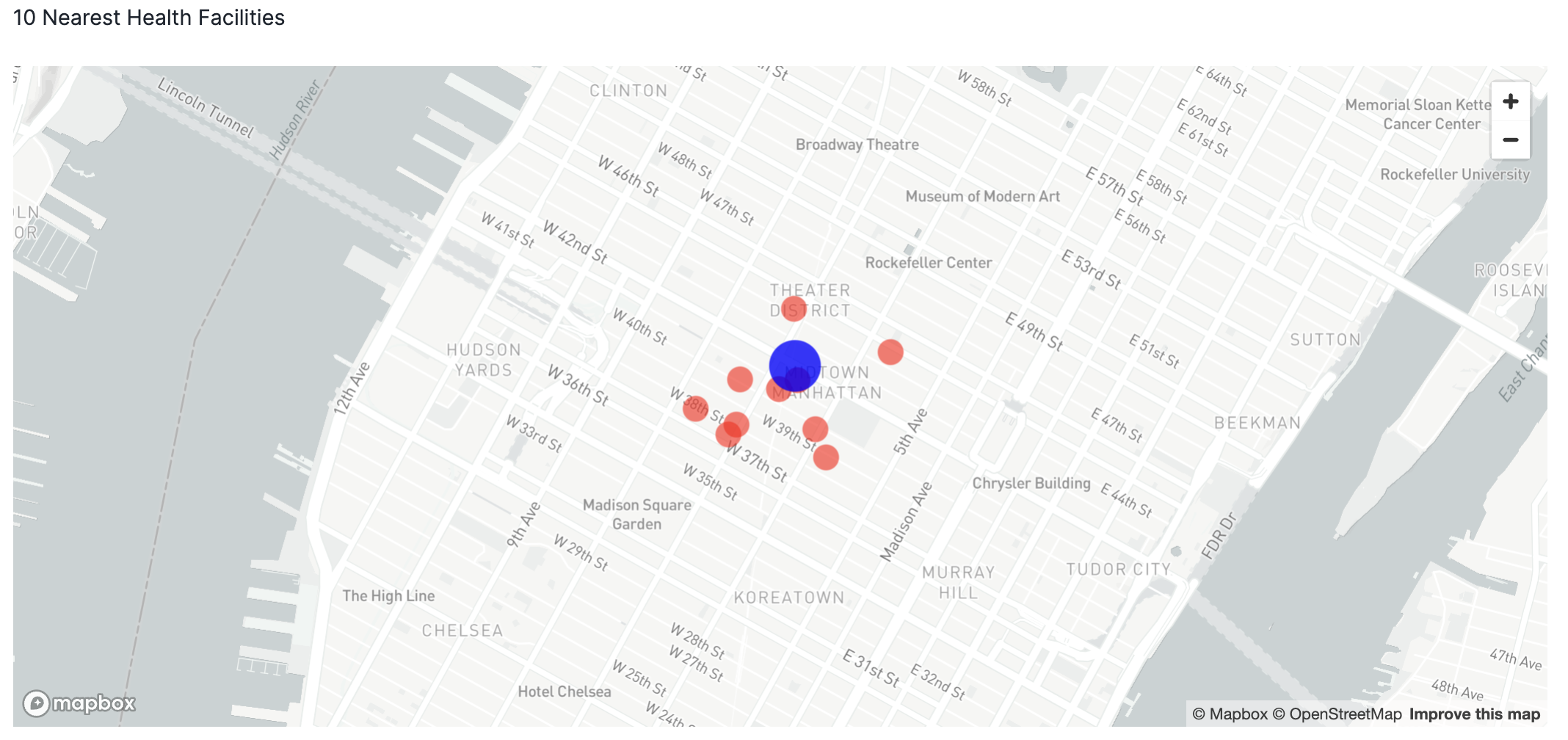

Creating multi-layered maps in Streamlit

Using Streamlit and Pydeck, you can create a multi-layered visualization.

Take note of the name of your previous cell and run the command below in a python cell to convert the results of the previous query into a pandas dataframe. We will reference this dataframe in the visualization.

df = query_9.to_pandas()

Now you will visualize the top 10 health facilities relative to the reference point. Pydeck supports multi-layered maps that can be customized with tooltips and other features.

import streamlit as st

import pandas as pd

import pydeck as pdk

# Define the coordinates for your specific location

latitude = 40.755702

longitude = -73.986226

# Create a DataFrame for your location

my_location_df = pd.DataFrame({

'lat': [latitude],

'lon': [longitude]

})

# Create a PyDeck Layer for visualizing points with larger size and a tooltip for NAME

data_layer = pdk.Layer(

"ScatterplotLayer",

df,

get_position='[LONGITUDE, LATITUDE]',

get_radius=50, # Adjust this value for larger points

get_fill_color='[255, 0, 0, 160]', # Red color with transparency

pickable=True,

get_tooltip=['NAME'], # Add NAME as a tooltip

)

# Create a PyDeck Layer for your location with a different color and size

my_location_layer = pdk.Layer(

"ScatterplotLayer",

my_location_df,

get_position='[lon, lat]',

get_radius=100, # Larger radius to highlight your location

get_fill_color='[0, 0, 255, 200]', # Blue color with transparency

pickable=True,

)

# Set the view on the map

view_state = pdk.ViewState(

latitude=df['LATITUDE'].mean(),

longitude=df['LONGITUDE'].mean(),

zoom=13.5, # Adjust zoom if needed

pitch=0,

)

# Define the tooltip

tooltip = {

"html": "<b>Facility Name:</b> {NAME}",

"style": {"color": "white"}

}

# Render the map with both layers and tooltip

r = pdk.Deck(

layers=[data_layer, my_location_layer],

initial_view_state=view_state,

map_style='mapbox://styles/mapbox/light-v10',

tooltip=tooltip

)

st.write('10 Nearest Health Facilities')

st.pydeck_chart(r, use_container_width=True)

Conclusion

Congratulations! You have completed this introductory quickstart. You learn basic operations to construct, process and visualise geospatial data.

Overview

Geospatial query capabilities in Snowflake are built upon a combination of data types and specialized query functions that can be used to parse, construct, and run calculations over geospatial objects. This guide will introduce you to the GEOMETRY data type, help you understand geospatial formats supported by Snowflake and walk you through the use of a variety of functions on sample geospatial data sets.

What You'll Learn

- How to acquire geospatial data from the Snowflake Marketplace

- How to load geospatial data from a Stage

- How to interpret the

GEOMETRYdata type and how it differs from theGEOGRAPHY - How to understand the different formats that

GEOMETRYcan be expressed in - How to do spatial analysis using the

GEOMETRYandGEOGRAPHYdata types - How to use Python UDFs for reading Shapefiles and creating custom functions

- How to visualise geospatial data using Streamlit

What You'll Build

A sample use case that involves energy grids and LTE cell towers in the Netherlands You will answer the following questions:

- What is the length of all energy grids in each municipality in the Netherlands?

- What cell towers lack electricity cables nearby?

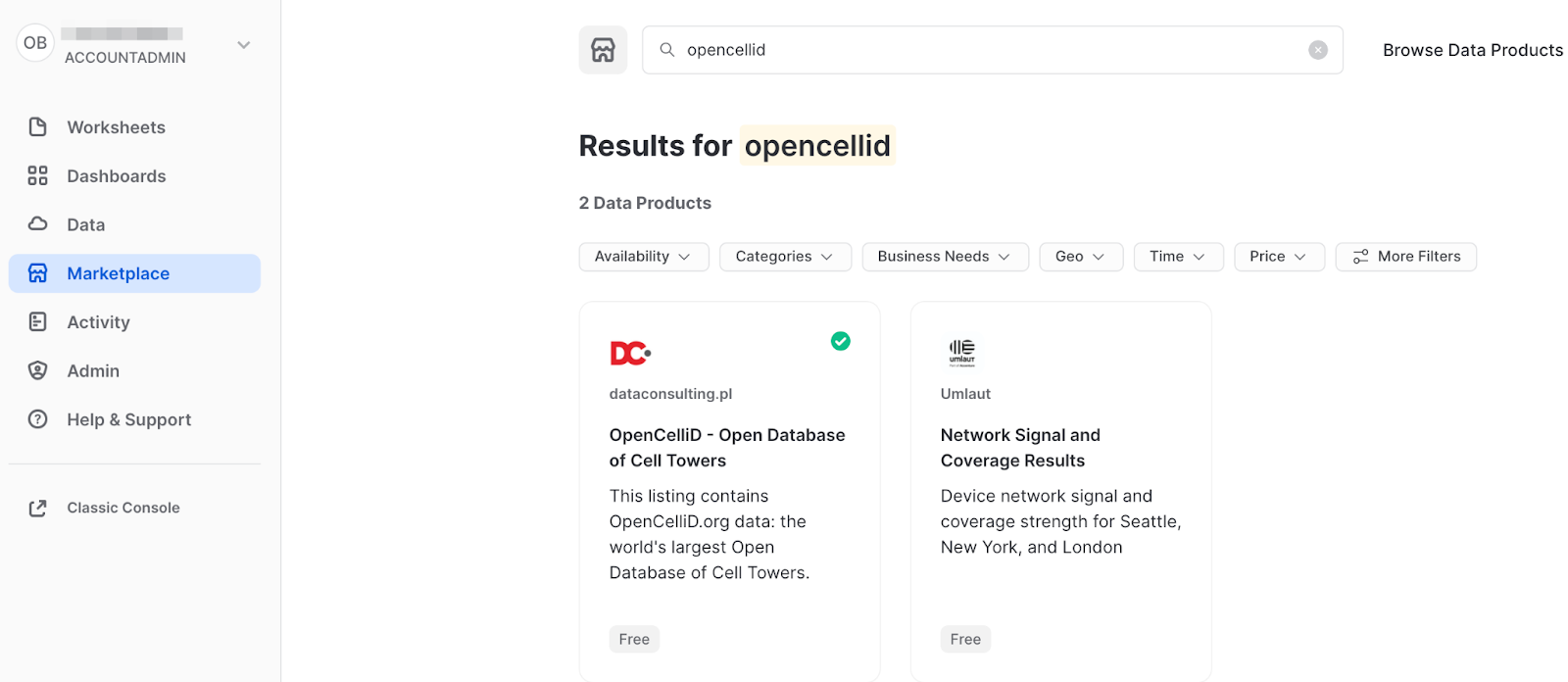

Acquire Marketplace Data and Analytics Toolbox

The first step in the guide is to acquire geospatial data sets that you can freely use to explore the basics of Snowflake's geospatial functionality. The best place to acquire this data is the Snowflake Marketplace!

- Navigate to the

Marketplacescreen using the menu on the left side of the window - Search for

OpenCelliDin the search bar - Find and click the

OpenCelliD - Open Database of Cell Towerstile or just use this link

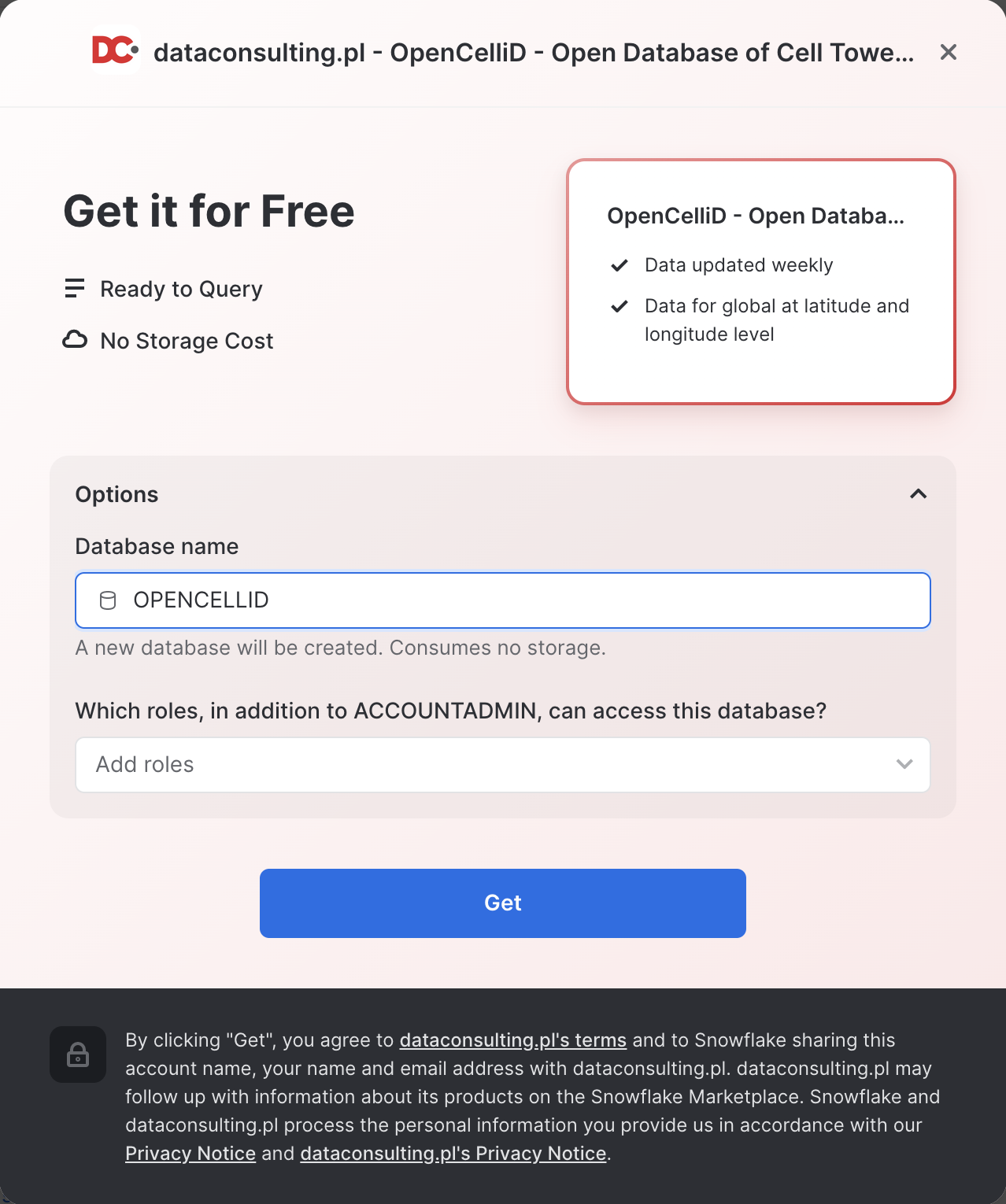

- Once in the listing, click the big blue

Getbutton

- On the

Get Datascreen, change the name of the database from the default toOPENCELLID, as this name is shorter, and all future instructions will assume this name for the database.

Similarly to the above dataset, acquire SedonaSnow application which extends Snowflake core geo features with more than 100 spatial functions. Navigate to the Marketplace screen using the menu on the left side of the window and find the SedonaSnow. Keep the the database name SEDONASNOW and optionally add more roles that can access the database.

Congratulations! You have just acquired all the listings you need for this lab.

Setup your Account

Create a new database and schema where you will store datasets in the GEOMETRY data type. Run th following SQL:

CREATE DATABASE IF NOT EXISTS GEOLAB;

CREATE SCHEMA IF NOT EXISTS GEOLAB.GEOMETRY;

USE SCHEMA GEOLAB.GEOMETRY;

Load Data from External Storage

You already understand how to get data from Marketplace, let's try another way of getting data, namely, getting it from the external S3 storage. While you loading data you will learn formats supported by geospatial data types.

For this quickstart we have prepared a dataset with energy grid infrastructure (cable lines) in the Netherlands. It is stored in the CSV format in the public S3 bucket. To import this data, create an external stage using the following SQL command:

CREATE OR REPLACE STAGE geolab.geometry.geostage

URL = 's3://sfquickstarts/vhol_spatial_analysis_geometry_geography/';

Now you will create a new table using the file from that stage. Run the following queries to create a new file format and a new table using the dataset stored in the Stage:

// Create file format

CREATE OR REPLACE FILE FORMAT geocsv TYPE = CSV SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '"';

CREATE OR REPLACE TABLE geolab.geometry.nl_cables_stations AS

SELECT to_geometry($1) AS geometry,

$2 AS id,

$3 AS type

FROM @geostage/nl_stations_cables.csv (file_format => 'geocsv');

Look at the description of the table you just created by running the following queries:

DESC TABLE geolab.geometry.nl_cables_stations;

The desc or describe command shows you the definition of the view, including the columns, their data type, and other relevant details. Notice the geometry column is defined as GEOMETRY type.

Snowflake supports 3 primary geospatial formats and 2 additional variations on those formats. They are:

- GeoJSON: a JSON-based standard for representing geospatial data

- WKT & EWKT: a "Well Known Text" string format for representing geospatial data and the "Extended" variation of that format

- WKB & EWKB: a "Well Known Binary" format for representing geospatial data in binary and the "Extended" variation of that format

These formats are supported for ingestion (files containing those formats can be loaded into a GEOMETRY typed column), query result display, and data unloading to new files. You don't need to worry about how Snowflake stores the data under the covers but rather how the data is displayed to you or unloaded to files through the value of session variables called GEOMETRY_OUTPUT_FORMAT.

Run the query below to make sure the current format is GeoJSON:

ALTER SESSION SET geometry_output_format = 'GEOJSON';

The alter session command lets you set a parameter for your current user session, which in this case is GEOMETRY_OUTPUT_FORMAT. The default value for those parameters is 'GEOJSON', so normally you wouldn't have to run this command if you want that format, but this guide wants to be certain the next queries are run with the 'GEOJSON' output.

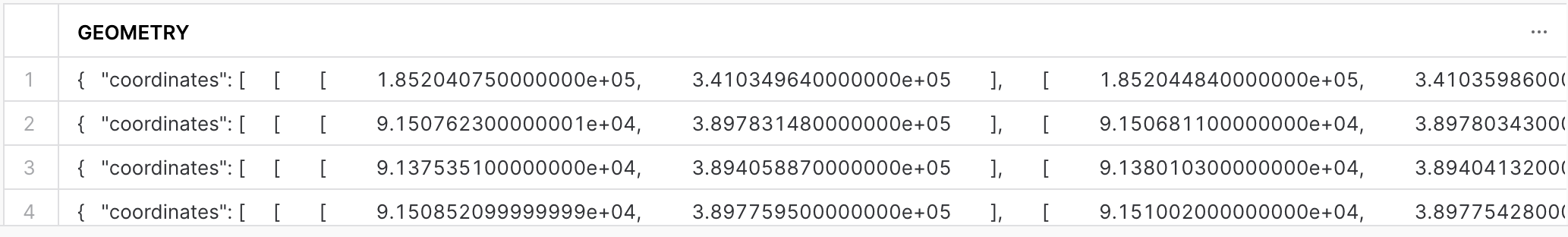

Now run the following query against the nl_cables_stations table to see energy grids in the Netherlands.

SELECT geometry

FROM nl_cables_stations

LIMIT 5;

In the result set, notice the GEOMETRY column and how it displays a JSON representation of spatial objects. It should look similar to this:

{"coordinates": [[[1.852040750000000e+05, 3.410349640000000e+05],

[1.852044840000000e+05,3.410359860000000e+05]],

[[1.852390240000000e+05,3.411219340000000e+05],

... ,

[1.852800600000000e+05,3.412219960000000e+05]] ],

"type": "MultiLineString" }

Unlike GEOGRAPHY, which treats all points as longitude and latitude on a spherical earth, GEOMETRY considers the Earth as a flat surface. More information about Snowflake's specification can be found here. In this example it uses scientific notation and the numbers are much larger than latitude and longitude boundaries [-180; 180].

Now look at the same query but in a different format. Run the following query:

ALTER SESSION SET geometry_output_format = 'EWKT';

Run the previous SELECT query again and when done, examine the output in the GEOMETRY column.

SELECT geometry

FROM nl_cables_stations

LIMIT 5;

EWKT looks different from GeoJSON, and is arguably more readable. Here you can more clearly see the geospatial object types, which are represented below in the example output:

SRID=28992;MULTILINESTRING((185204.075 341034.964,185204.484 341035.986), ... ,(185276.402 341212.688,185279.319 341220.196,185280.06 341221.996))

EWKT also shows the spatial reference identifier and in our example, you have a dataset in Amersfoort / RD New spatial reference system, that is why the displayed SRID is 28992.

Lastly, look at the WKB output. Run the following query:

ALTER SESSION SET geometry_output_format = 'WKB';

Run the query again:

SELECT geometry

FROM nl_cables_stations

LIMIT 5;

Now that you have a basic understanding of how the GEOMETRY data type works and what a geospatial representation of data looks like in various output formats, it's time to walk through a scenario that requires you to use constructors to load data. You will do it while trying one more way of getting data, namely, from the Shapefile file stored in the external stage.

One of the files in the external stage contains the polygons of administrative boundaries in the Netherlands. The data is stored in Shapefile format which is not yet supported by Snowflake. But you can load this file using Python UDF and Dynamic File Access feature. You will also use some packages available in the Snowflake Anaconda channel.

Run the following query that creates a UDF to read shapfiles:

CREATE OR REPLACE FUNCTION geolab.geometry.py_load_geodata(PATH_TO_FILE string, filename string)

RETURNS TABLE (wkt varchar, properties object)

LANGUAGE PYTHON

RUNTIME_VERSION = 3.8

PACKAGES = ('fiona', 'shapely', 'snowflake-snowpark-python')

HANDLER = 'GeoFileReader'

AS $$

from shapely.geometry import shape

from snowflake.snowpark.files import SnowflakeFile

from fiona.io import ZipMemoryFile

class GeoFileReader:

def process(self, PATH_TO_FILE: str, filename: str):

with SnowflakeFile.open(PATH_TO_FILE, 'rb') as f:

with ZipMemoryFile(f) as zip:

with zip.open(filename) as collection:

for record in collection:

yield (shape(record['geometry']).wkt, dict(record['properties']))

$$;

This UDF reads a Shapefile and returns its content as a table. Under the hood it uses geospatial libraries fiona and shapely. Run the following query to see the content of the uploaded shapefile.

ALTER SESSION SET geometry_output_format = 'EWKT';

SELECT to_geometry(wkt) AS geometry,

properties:NAME_1::string AS province_name,

properties:NAME_2::string AS municipality_name

FROM table(py_load_geodata(build_scoped_file_url(@geolab.geometry.geostage, 'nl_areas.zip'), 'nl_areas.shp'));

This query fails with the error NotebookSqlException: 100383: Geometry validation failed: Geometry has invalid self-intersections. A self-intersection point was found at (559963, 5.71069e+06). The constructor function determines if the shape is valid according to the Open Geospatial Consortium's Simple Feature Access / Common Architecture standard. If the shape is invalid, the function reports an error and does not create the GEOMETRY object. That is what happened in our example.

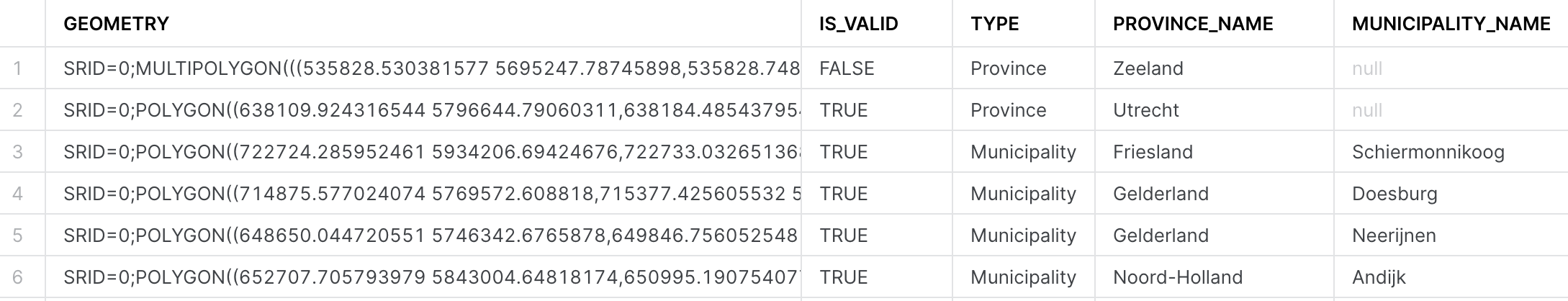

To fix this you can allow the ingestion of invalid shapes by setting the corresponding parameter to True. Let's run the SELECT statement again, but update the query to see how many shapes are invalid. Run the following query:

SELECT to_geometry(s => wkt, allowInvalid => True) AS geometry,

st_isvalid(geometry) AS is_valid,

properties:NAME_1::string AS province_name,

properties:NAME_2::string AS municipality_name

FROM table(py_load_geodata(build_scoped_file_url(@geolab.geometry.geostage, 'nl_areas.zip'), 'nl_areas.shp'))

ORDER BY is_valid ASC;

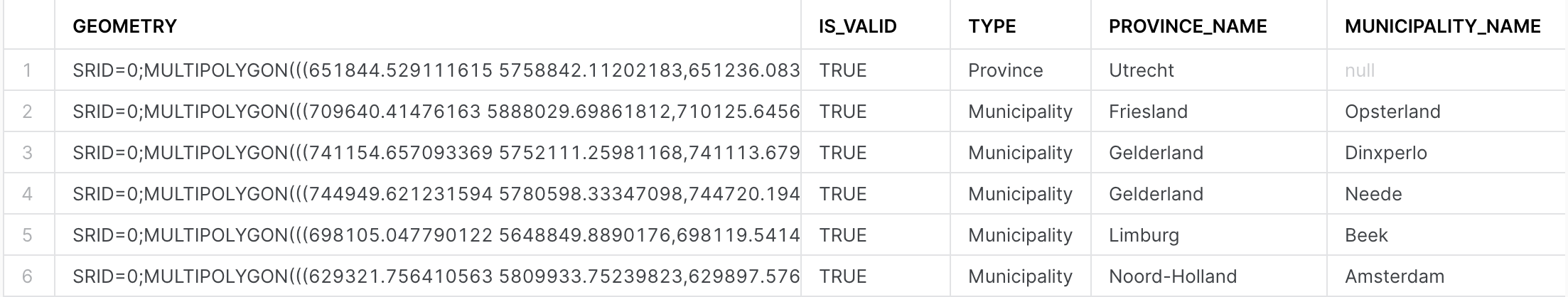

This query completed without error and now you see that the shape of the province Zeeland is invalid. Let's repair it by applying the ST_MakeValid function from SedonaSnow Native app:

SELECT SEDONASNOW.SEDONA.st_MakeValid(to_geometry(s => wkt, allowInvalid => True)) AS geometry,

st_isvalid(geometry) AS is_valid,

(CASE WHEN properties:TYPE_1::string IS NULL THEN 'Municipality' ELSE 'Province' END) AS type,

properties:NAME_1::string AS province_name,

properties:NAME_2::string AS municipality_name

FROM table(py_load_geodata(build_scoped_file_url(@geolab.geometry.geostage, 'nl_areas.zip'), 'nl_areas.shp'))

ORDER BY is_valid ASC;

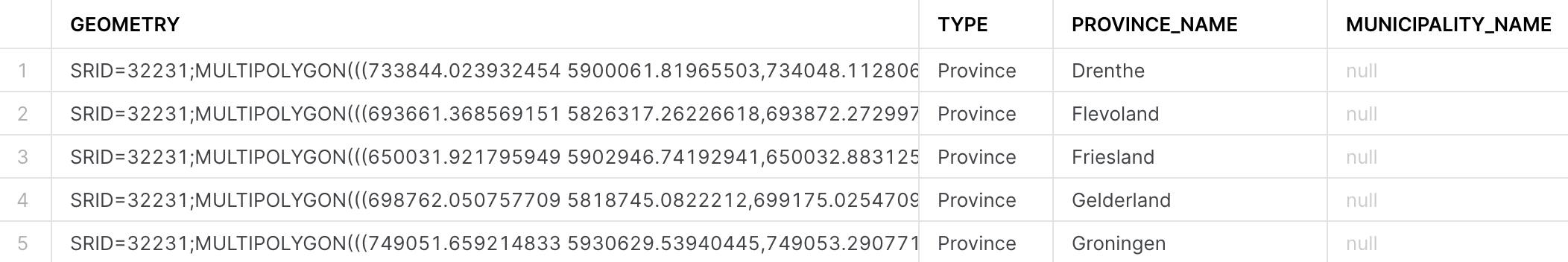

Now all shapes are valid and the data is ready to be ingested. One additional thing you should do is to set SRID, since otherwise it will be set to 0. This dataset is in the reference system WGS 72 / UTM zone 31N, so it makes sense to add the SRID=32231 to the constructor function.

Run the following query:

CREATE OR REPLACE TABLE geolab.geometry.nl_administrative_areas AS

SELECT ST_SETSRID(SEDONASNOW.SEDONA.ST_MakeValid(to_geometry(s => wkt, srid => 32231, allowInvalid => True)), 32231) AS geometry,

st_isvalid(geometry) AS is_valid,

(CASE WHEN properties:TYPE_1::string IS NULL THEN 'Municipality' ELSE 'Province' END) AS type,

properties:NAME_1::string AS province_name,

properties:NAME_2::string AS municipality_name

FROM table(py_load_geodata(build_scoped_file_url(@geolab.geometry.geostage, 'nl_areas.zip'), 'nl_areas.shp'))

ORDER BY is_valid ASC;

Excellent! Now that all the datasets are successfully loaded, let's proceed to the next exciting step: the analysis.

Energy grids Analysis

To showcase the capabilities of the GEOMETRY data type, you will explore several use cases. In these scenarios, you'll assume you are an analyst working for an energy utilities company responsible for maintaining electrical grids.

What is the length of the electricity cables?

In the first use case you will calculate the length of electrical cables your organization is responsible for in each administrative area within the Netherlands. You'll be utilizing two datasets: with power infrastructure of the Netherlands and the borders of Dutch administrative areas. First, let's check the sample of each dataset.

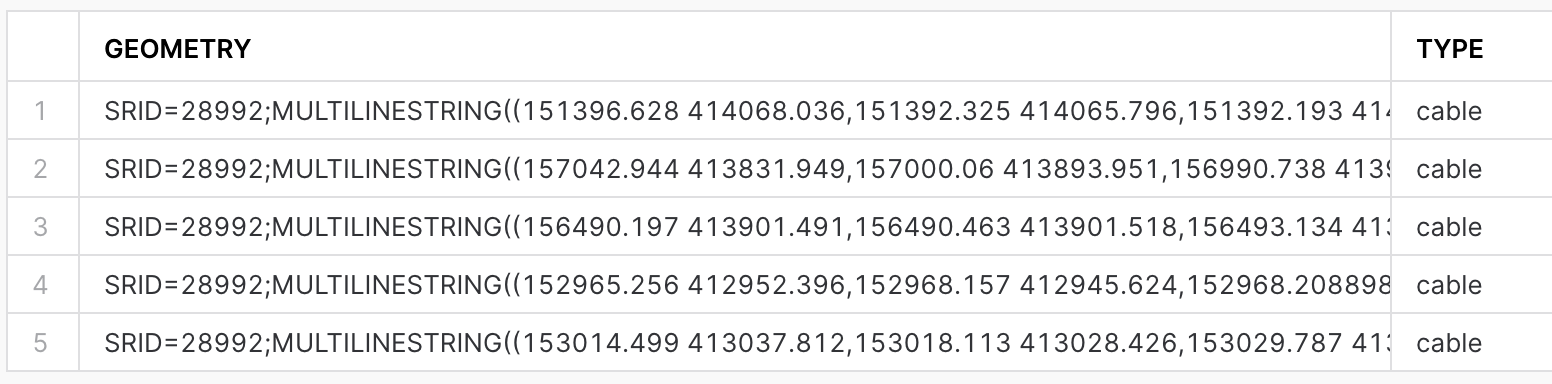

Run the following query to see the content of nl_cables_stations table:

SELECT geometry, type

FROM geolab.geometry.nl_cables_stations

LIMIT 5;

The spatial data is stored using the GEOMETRY data type and employs the Dutch mapping system, Amersfoort / RD New (SRID = 28992).

To view the contents of the table containing the boundaries of the administrative areas in the Netherlands, execute the following query:

SELECT *

FROM geolab.geometry.nl_administrative_areas

LIMIT 5;

In order to compute the length of all cables per administrative area, it's essential that both datasets adhere to the same mapping system. You have two options: either project nl_administrative_areas to SRID 28992, or project nl_cables_stations to SRID 32231. For this exercise, let's choose the first option. Run the following query:

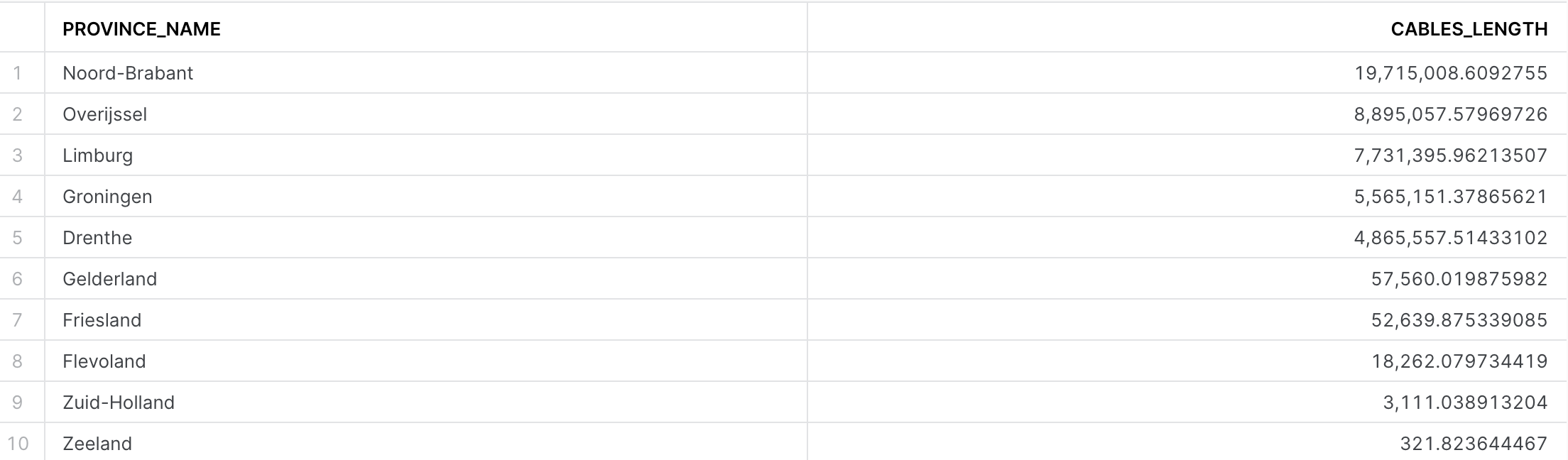

SELECT t1.province_name,

sum(st_length(t2.geometry)) AS cables_length

FROM geolab.geometry.nl_administrative_areas AS t1,

geolab.geometry.nl_cables_stations AS t2

WHERE st_intersects(st_transform(t1.geometry, 28992), t2.geometry)

AND t1.type = 'Province'

GROUP BY 1

ORDER BY 2 DESC;

You have five areas densely covered by electricity cables, those are the ones that your company is responsible for. For your first analysis, you will focus on these areas.

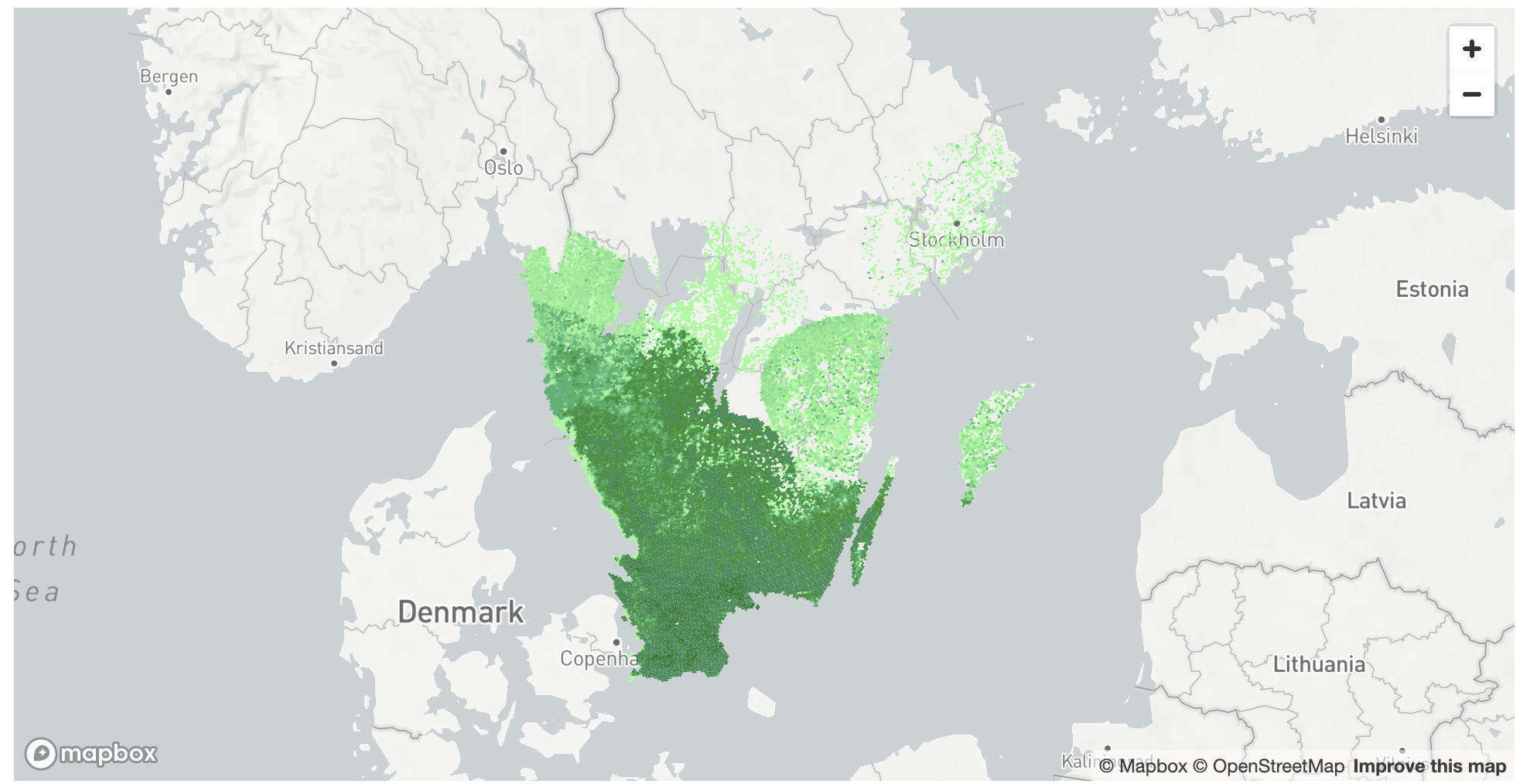

What cell towers lack electricity cables nearby?

In many areas, especially rural or remote ones, cell towers might be located far from electricity grids. This can pose a challenge in providing a reliable power supply to these towers. They often rely on diesel generators, which can be expensive to operate and maintain and have environmental implications. Furthermore, power outages can lead to disruptions in mobile connectivity, impacting individuals, businesses, and emergency services.

Our analysis aims to identify mobile cell towers that are not near an existing electricity grid. This information could be used to prioritize areas for grid expansion, to improve the efficiency of renewable energy source installations (like solar panels or wind turbines), or to consider alternative energy solutions.

For this and the next examples let's use GEOGRAPHY data type as it can be easily visualized using CARTO. As a first step, let's create GEOGRAPHY equivalents for the energy grids and boundaries tables. For that you need to project the geometry column in each of the tables into the mapping system WGS 84 (SRID=4326) and then convert to GEOGRAPHY data type. Run the following queries that create new tables and enable search optimization for each of them in order to increase the performance of spatial operations.

// Creating a table with GEOGRAPHY for nl_administrative_areas

CREATE SCHEMA IF NOT EXISTS GEOLAB.GEOGRAPHY;

CREATE OR REPLACE TABLE geolab.geography.nl_administrative_areas AS

SELECT to_geography(st_transform(geometry, 4326)) AS geom,

type,

province_name,

municipality_name

FROM geolab.geometry.nl_administrative_areas

ORDER BY st_geohash(geom);

// Creating a table with GEOGRAPHY for nl_cables_stations

CREATE OR REPLACE TABLE geolab.geography.nl_cables_stations AS

SELECT to_geography(st_transform(geometry, 4326)) AS geom,

id,

type

FROM geolab.geometry.nl_cables_stations

ORDER BY st_geohash(geom);

Now you will create a table with locations of cell towers stored as GEOGRAPHY, just like for the previous two tables. Run the following query:

CREATE OR REPLACE TABLE geolab.geography.nl_lte AS

SELECT DISTINCT st_point(lon, lat) AS geom,

cell_range

FROM OPENCELLID.PUBLIC.RAW_CELL_TOWERS t1

WHERE mcc = '204' -- 204 is the mobile country code in the Netherlands

AND radio='LTE'

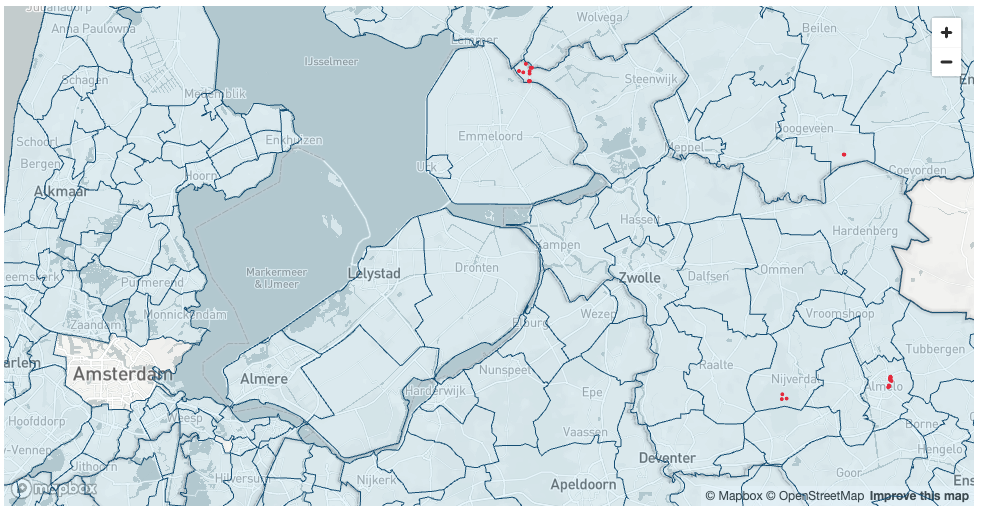

Finally, you will find all cell towers that don't have an energy line within a 2-kilometer radius. For each cell tower you'll calculate the distance to the nearest electricity cable. You will use Streamlit library pydeck to visualise municipalities and locations of cell towers.

You can create visualisation either in Notebooks or as a Strealit app. As a preparation step you need to import pydeck library that you will use in this Lab. Navigate to the Packages drop-down in the upper right of the Notebook (upper left of the Streamlit app) and search for pydeck. Click on pydeck to add it to the Python packages. Then run the following Python code:

import streamlit as st

import pandas as pd

import pydeck as pdk

import json

from snowflake.snowpark.context import get_active_session

session = get_active_session()

def get_celltowers() -> pd.DataFrame:

return session.sql(f"""

SELECT province_name,

cells.geom

FROM geolab.geography.nl_lte cells

LEFT JOIN geolab.geography.nl_cables_stations cables

ON st_dwithin(cells.geom, cables.geom, 2000)

JOIN geolab.geography.nl_administrative_areas areas

ON st_contains(areas.geom, cells.geom)

WHERE areas.type = 'Municipality'

AND areas.province_name in ('Noord-Brabant', 'Overijssel', 'Limburg', 'Groningen', 'Drenthe')

AND cables.geom IS NULL; """).to_pandas()

def get_boundaries() -> pd.DataFrame:

return session.sql(f"""

SELECT st_simplify(GEOM, 10) as geom, municipality_name

FROM geolab.geography.nl_administrative_areas

WHERE type = 'Municipality';

""").to_pandas()

boundaries = get_boundaries()

boundaries["coordinates"] = boundaries["GEOM"].apply(lambda row: json.loads(row)["coordinates"][0])

celltowers = get_celltowers()

celltowers["lon"] = celltowers["GEOM"].apply(lambda row: json.loads(row)["coordinates"][0])

celltowers["lat"] = celltowers["GEOM"].apply(lambda row: json.loads(row)["coordinates"][1])

layer_celltowers = pdk.Layer(

"ScatterplotLayer",

celltowers,

get_position=["lon", "lat"],

id="celltowers",

stroked=True,

filled=True,

extruded=False,

wireframe=True,

get_fill_color=[233, 43, 65],

get_line_color=[233, 43, 65],

get_radius=300,

auto_highlight=True,

pickable=False,

)

layer_boundaries = pdk.Layer(

"PolygonLayer",

data=boundaries,

id="province-layer",

get_polygon="coordinates",

extruded=False,

opacity=0.9,

wireframe=True,

pickable=True,

stroked=True,

filled=True,

line_width_min_pixels=1,

get_line_color=[17, 86, 127], # Red color for the border

get_fill_color=[43, 181, 233, 30], # Blue fill with transparency

coverage=1

)

st.pydeck_chart(pdk.Deck(

map_style=None,

initial_view_state=pdk.ViewState(

latitude=51.97954426323304,

longitude=5.626041932127842,

# pitch=45,

zoom=8),

tooltip={

'html': '<b>Province name:</b> {MUNICIPALITY_NAME}',

'style': {

'color': 'white'

}

},

layers=[layer_boundaries, layer_celltowers],

))

Another way to visualise geospatial data is using open-source geo analytics tool QGIS. Do the following steps:

- Install the latest Long Term Version of QGIS

- Install Snowflake conector. Go to

Plugins>All, search forSnowflake Connector for QGISand clickInstall Plugin. - Go to

Layer>Data Source Managerand create a new connection to Snowflake. Call itSNOWFLAKE(all letters capital). Check the documentation to learn mor on how to create new coonection - Download a QGIS project that we created for you and open it in QGIS.

- If previous steps done correctly, you should be able to see the following layers in QGIS

ENERGY_GRIDS(LINESTRING and MULTILINESTRING) - energy frids for Noord-Brabant, Overijssel, Limburg, Groningen, and Drenthe.CELL_TOWERS_WITHOUT_CABLES- cell towers in the regions above that don't have energy grids within radius of 2km.Municipalities(POLYGON and MULTIPOLYGON) - Boundaries of Dutch municipalities.

Conclusion

In this guide, you acquired geospatial data from the Snowflake Marketplace, explored how the GEOMETRY data type works and how it differs from GEOGRAPHY. You converted one data type into another and queried geospatial data using parser, constructor, transformation, and used geospatial joins. You then saw how geospatial objects could be visualized using CARTO.

You are now ready to explore the larger world of Snowflake geospatial support and geospatial functions.

What we've covered

- How to acquire a shared database from the Snowflake Marketplace and from External and internal storages.

- The GEOMETRY data type, its formats GeoJSON, WKT, EWKT, WKB, and EWKB, and how to switch between them.

- How to use constructors like TO_GEOMETRY, ST_MAKELINE.

- How to reproject between SRIDs using ST_TRANSFORM.

- How to perform relational calculations like ST_DWITHIN and ST_INTERSECTS.

- How to perform measurement calculations like ST_LENGTH.

- How to use Python UDFs for reading Shapefiles and creating custom functions.

- How to visualise geospatial data using Streamlit and QGIS

In this lab, we will demonstrate how to perform geocoding and reverse geocoding using datasets and applications from the Marketplace. You will learn how to:

- Perform address cleansing

- Convert an address into a location (geocoding)

- Convert a location into an address (reverse geocoding)

For the most precise and reliable geocoding results, we recommend using specialized services like Mapbox or TravelTime. While the methods described in this Lab can be useful, they may not always achieve the highest accuracy, especially in areas with sparse data or complex geographic features. If your application demands extremely precise geocoding, consider investing in a proven solution with guaranteed accuracy and robust support.

However, many companies seek cost-effective solutions for geocoding large datasets. In such cases, supplementing specialized services with free datasets can be a viable approach. Datasets provided by the Overture Maps Foundation or OpenAddresses can be valuable resources for building solutions that are "good enough", especially when some accuracy can be compromised in favor of cost-efficiency. It's essential to evaluate your specific needs and constraints before selecting a geocoding approach.

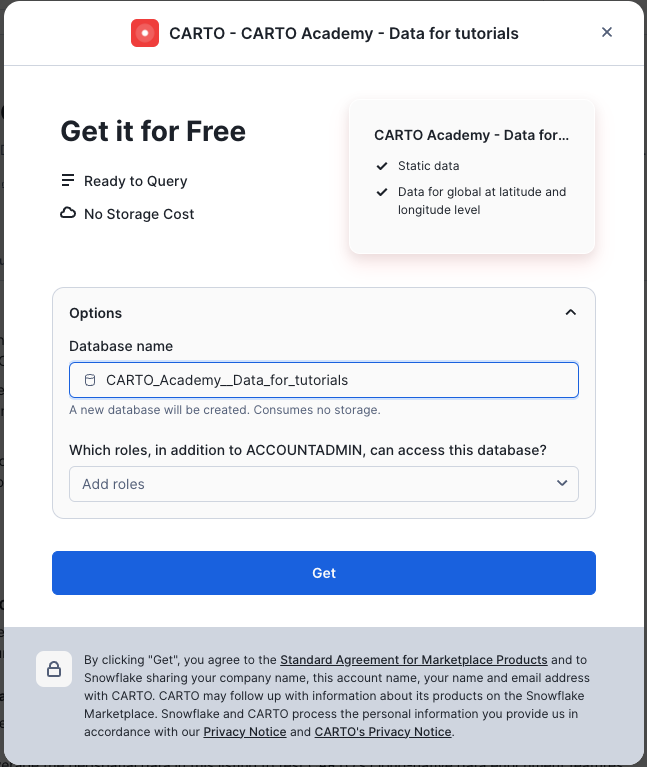

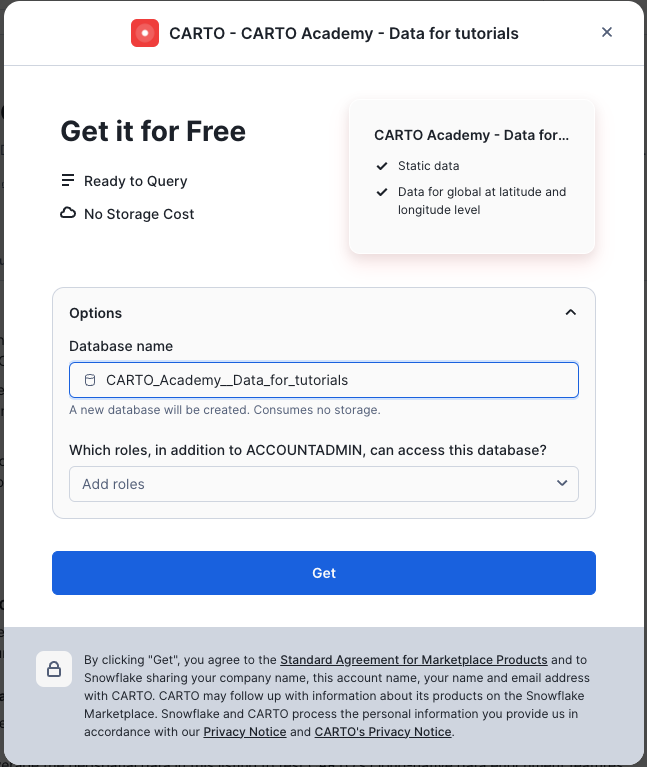

Step 1. Data acquisition

For this project you will use a dataset with locations of restaurants and cafes in Berlin from the CARTO Academy Marketplace listing.

- Navigate to the

Marketplacescreen using the menu on the left side of the window - Search for

CARTO Academyin the search bar - Find and click the

CARTO Academy - Data for tutorialstile - Once in the listing, click the big blue

Getbutton

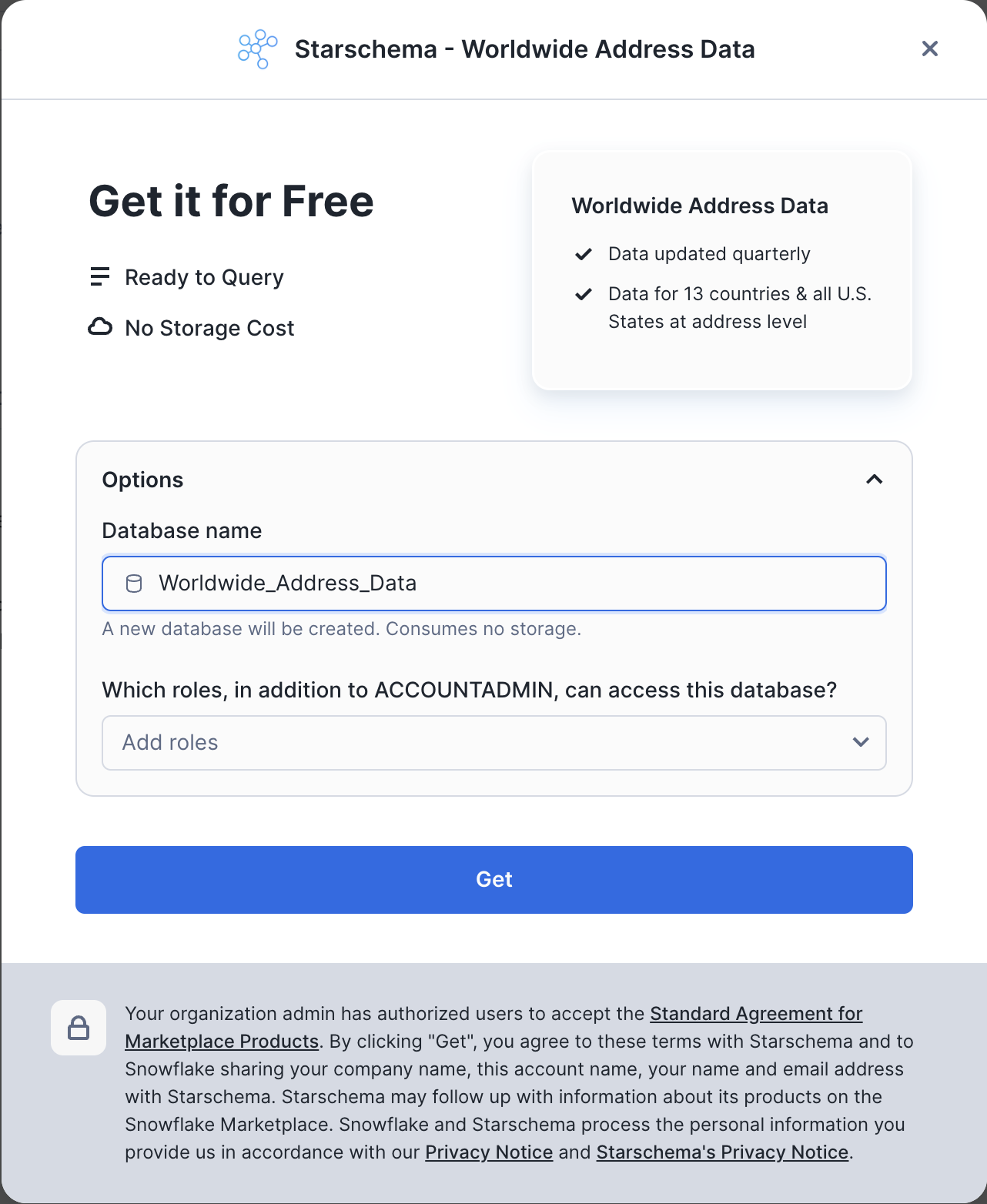

Another dataset that you will use in this Lab is Worldwide Address Data and you can also get it from the Snowflake Marketplace. It's a free dataset from the OpenAddresses project that allows Snowflake customers to map lat/long information to address details.

- Search for

Worldwide Address Datain the search bar - Find and click on the corresponding dataset from Starschema

- On the

Get Datascreen, don't change the name of the database fromWORLDWIDE_ADDRESS_DATA.

Nice! You have just got two listings that you will need for this project.

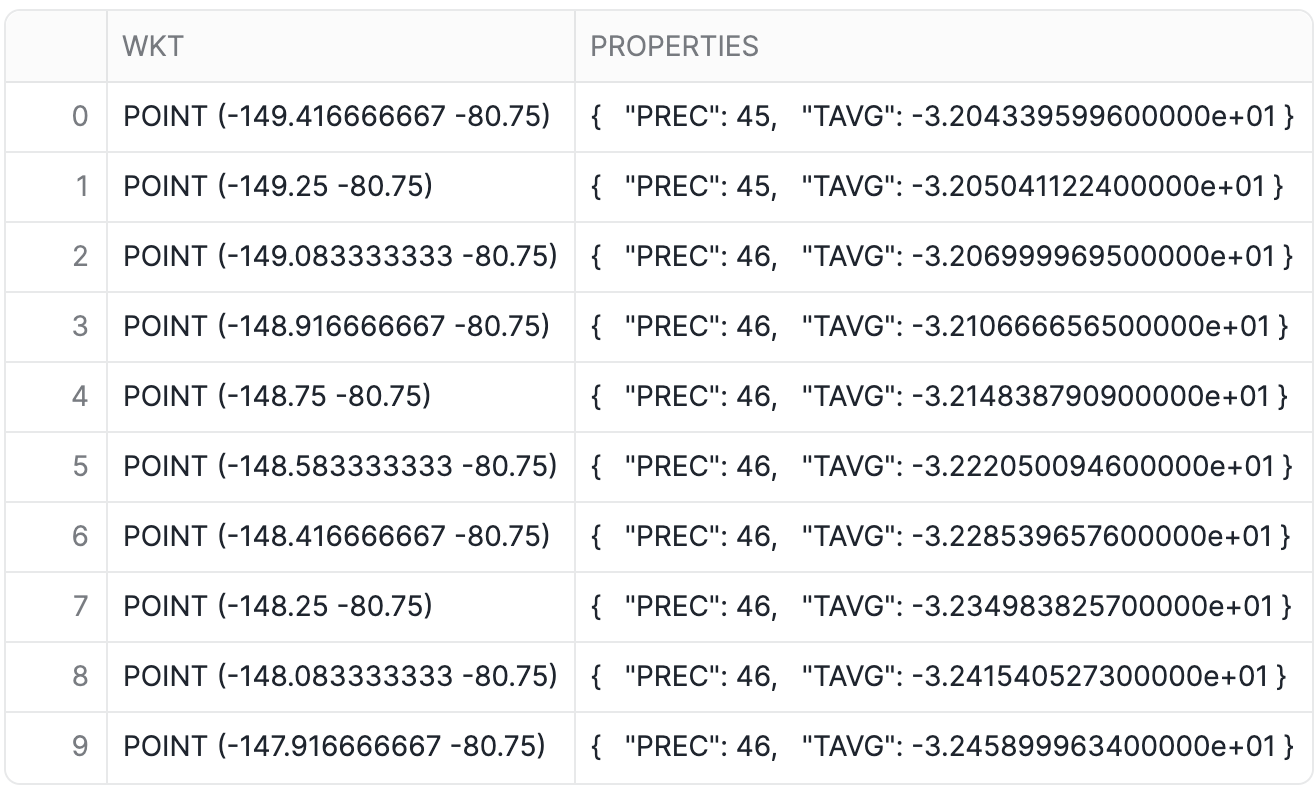

Step 2. Data Preparation

To showcase geocoding techniques in this lab, and to evaluate the quality of our approach you will use a table CARTO_ACADEMY__DATA_FOR_TUTORIALS.CARTO.DATAAPPEAL_RESTAURANTS_AND_CAFES_BERLIN_CPG with locations of restaurants and cafes in Berlin. If you look into that table you will notice that some records don't have full or correct information in the STREET_ADDRESS column. To be able to calculate the correct quality metrics in this lab lets do a simple cleanup of the low quality datapoint. Run the following query to create a table that has only records that have 5-digits postcode and those records are in Berlin.

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.GEOCODING_ADDRESSES AS

SELECT *

FROM CARTO_ACADEMY__DATA_FOR_TUTORIALS.CARTO.DATAAPPEAL_RESTAURANTS_AND_CAFES_BERLIN_CPG

WHERE REGEXP_SUBSTR(street_address, '(\\d{5})') is not null

AND city ILIKE 'berlin';

If you check the size of ADVANCED_ANALYTICS.PUBLIC.GEOCODING_ADDRESSES table you'll see that it has about 10K rows.

The Worldwide Address Data dataset contains more than 500M addresses around the world and we will use it for geocoding and reverse geocoding. However some addresses in that dataset contain addresses with coordinates outside of the allowed boundaries for latitude and longitude. Run the following query to create a new table that filters out those "invalid" records and includes a new column, LOCATION, which stores the locations in the GEOGRAPHY type:

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.OPENADDRESS AS

SELECT ST_POINT(lon, lat) as location, *

FROM WORLDWIDE_ADDRESS_DATA.ADDRESS.OPENADDRESS

WHERE lon between -180 and 180

AND lat between -90 and 90;

Now when all your data is ready and clean, you can proceed to the actual use cases.

Step 2. Data Cleansing

Customer-provided address data is often incomplete or contains spelling mistakes. If you plan to perform geocoding on that data, it would be a good idea to include address cleansing as a preparation step.

In this step, you will prepare a prompt to run the data cleansing. For this task, you will use the CORTEX.COMPLETE() function because it is purpose-built for data processing and data generation tasks. First, let's create a Cortex role. In the query below, replace AA with the username you used to log in to Snowflake.

CREATE ROLE IF NOT EXISTS cortex_user_role;

GRANT DATABASE ROLE SNOWFLAKE.CORTEX_USER TO ROLE cortex_user_role;

GRANT ROLE cortex_user_role TO USER AA;

You are now ready to provide the CORTEX.COMPLETE() function with instructions on how to perform address cleansing. Specifically, using a table of Berlin restaurants, you'll create a new table with an additional column parsed_address, which is the result of the CORTEX.COMPLETE() function. For complex processing like this, you will use mistral-8x7b, a very capable open-source LLM created by Mistral AI. Essentially, we want to parse the address stored as a single string into a JSON object that contains each component of the address as a separate key.

As a general rule when writing a prompt, the instructions should be simple, clear, and complete. For example, you should clearly define the task as parsing an address into a JSON object. It's important to define the constraints of the desired output; otherwise, the LLM may produce unexpected results. Below, you specifically instruct the LLM to parse the address stored as text and explicitly tell it to respond in JSON format.

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES as

SELECT geom, geoid, street_address, name,

snowflake.cortex.complete('mixtral-8x7b',

concat('Task: Your job is to return a JSON formatted response that normalizes, standardizes, and enriches the following address,

filling in any missing information when needed: ', street_address,

'Requirements: Return only in valid JSON format (starting with { and ending with }).

The JSON response should include the following fields:

"number": <<house_number>>,

"street": <<street_name>>,

"city": <<city_name>>,

"postcode": <<postcode_value>>,

"country": <<ISO_3166-1_alpha-2_country_code>>.

Values inside <<>> must be replaced with the corresponding details from the address provided.

- If a value cannot be determined, use "Null".

- No additional fields or classifications should be included beyond the five categories listed.

- Country code must follow the ISO 3166-1 alpha-2 standard.

- Do not add comments or any other non-JSON text.

- Use Latin characters for street names and cities, avoiding Unicode alternatives.

Examples:

Input: "123 Mn Stret, San Franscico, CA"

Output: {"number": "123", "street": "Main Street", "city": "San Francisco", "postcode": "94105", "country": "US"}

Input: "45d Park Avnue, New Yrok, NY 10016"

Output: {"number": "45d", "street": "Park Avenue", "city": "New York", "postcode": "10016", "country": "US"}

Input: "10 Downig Stret, Londn, SW1A 2AA, United Knidom"

Output: {"number": "10", "street": "Downing Street", "city": "London", "postcode": "SW1A 2AA", "country": "UK"}

Input: "4 Avneu des Champs Elyses, Paris, France"

Output: {"number": "4", "street": "Avenue des Champs-Élysées", "city": "Paris", "postcode": "75008", "country": "FR"}

Input: "1600 Amiphiteatro Parkway, Montain View, CA 94043, USA"

Output: {"number": "1600", "street": "Amphitheatre Parkway", "city": "Mountain View", "postcode": "94043", "country": "US"}

Input: "Plaza de Espana, 28c, Madird, Spain"

Output: {"number": "28c", "street": "Plaza de España", "city": "Madrid", "postcode": "28008", "country": "ES"}

Input: "1d Prinzessinenstrase, Berlín, 10969, Germany"

Output: {"number": "1d", "street": "Prinzessinnenstraße", "city": "Berlin", "postcode": "10969", "country": "DE"} ')) as parsed_address

FROM ADVANCED_ANALYTICS.PUBLIC.GEOCODING_ADDRESSES;

On a LARGE warehouse, which we used in this quickstart, the query completed in about 13 minutes. However, on a smaller warehouse, the completion time is roughly the same. We don't recommend using a warehouse larger than MEDIUM for CORTEX LLM functions, as it won't significantly reduce execution time. If you plan to execute complex processing with LLM on a large dataset, it's better to split the dataset into chunks up to 100K rows each and run multiple jobs in parallel using an X-Small warehouse. A rule of thumb is that on an X-Small, data cleansing of 1,000 rows can be done within 90 seconds, which costs about 5 cents.

Now, you will convert the parsed address into JSON type:

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES AS

SELECT geoid, geom, street_address, name,

TRY_PARSE_JSON(parsed_address) AS parsed_address,

FROM ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES;

Run the following query to check what the result of cleansing looks like in the PARSED_ADDRESS column and compare it with the actual address in the STREET_ADDRESS column.

ALTER SESSION SET GEOGRAPHY_OUTPUT_FORMAT='WKT';

SELECT TOP 10 * FROM ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES;

You also can notice that 23 addresses were not correctly parsed, but if you look into the STREET_ADDRESS column of those records using the following query, you can understand why they were not parsed: in most cases there are some address elements missing in the initial address.

SELECT * FROM ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES

WHERE parsed_address IS NULL;

Step3. Geocoding

In this step, you will use the Worldwide Address Data to perform geocoding. You will join this dataset with your cleansed address data using country, city, postal code, street, and building number as keys. For street name comparison, you will use Jaro-Winkler distance to measure similarity between the two strings. You should use a sufficiently high similarity threshold but not 100%, which would imply exact matches. Approximate similarity is necessary to account for potential variations in street names, such as "Street" versus "Straße".

To the initial table with actual location and address, you will add columns with geocoded and parsed values for country, city, postcode, street, and building number. Run the following query:

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.GEOCODED AS

SELECT

t1.name,

t1.geom AS actual_location,

t2.location AS geocoded_location,

t1.street_address as actual_address,

t2.street as geocoded_street,

t2.postcode as geocoded_postcode,

t2.number as geocoded_number,

t2.city as geocoded_city

FROM ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES t1

LEFT JOIN ADVANCED_ANALYTICS.PUBLIC.OPENADDRESS t2

ON t1.parsed_address:postcode::string = t2.postcode

AND t1.parsed_address:number::string = t2.number

AND LOWER(t1.parsed_address:country::string) = LOWER(t2.country)

AND LOWER(t1.parsed_address:city::string) = LOWER(t2.city)

AND JAROWINKLER_SIMILARITY(LOWER(t1.parsed_address:street::string), LOWER(t2.street)) > 95;

Now let's analyze the results of geocoding and compare the locations we obtained after geocoding with the original addresses. First, let's see how many addresses we were not able to geocode using this approach.

SELECT count(*) FROM ADVANCED_ANALYTICS.PUBLIC.GEOCODED

WHERE geocoded_location IS NULL;

It turned out that 2,081 addresses were not geocoded, which is around 21% of the whole dataset. Let's see how many geocoded addresses deviate from the original location by more than 200 meters.

SELECT COUNT(*) FROM ADVANCED_ANALYTICS.PUBLIC.GEOCODED

WHERE ST_DISTANCE(actual_location, geocoded_location) > 200;

It seems there are 174 addresses. Let's examine random records from these 174 addresses individually by running the query below. You can visualize coordinates from the table with results using this service (copy-paste GEOCODED_LOCATION and ACTUAL_LOCATION values).

SELECT * FROM ADVANCED_ANALYTICS.PUBLIC.GEOCODED

WHERE ST_DISTANCE(actual_location, geocoded_location) > 200;

You can see that in many cases, our geocoding provided the correct location for the given address, while the original location point actually corresponds to a different address. Therefore, our approach returned more accurate locations than those in the original dataset. Sometimes, the "ground truth" data contains incorrect data points.

In this exercise, you successfully geocoded more than 78% of the entire dataset. To geocode the remaining addresses that were not geocoded using this approach, you can use paid services such as Mapbox or TravelTime. However, you managed to reduce the geocoding cost by more than four times compared to what it would have been if you had used those services for the entire dataset.

Step 4. Reverse Geocoding

In the next step, we will do the opposite - for a given location, we will get the address. Often, companies have location information and need to convert it into the actual address. Similar to the previous example, the best way to do reverse geocoding is to use specialized services, such as Mapbox or TravelTime. However, there are cases where you're ready to trade off between accuracy and cost. For example, if you don't need an exact address but a zip code would be good enough. In this case, you can use free datasets to perform reverse geocoding.

To complete this exercise, we will use the nearest neighbor approach. For locations in our test dataset (ADVANCED_ANALYTICS.PUBLIC.GEOCODING_ADDRESSES table), you will find the closest locations from the Worldwide Address Data. Let's first create a procedure that, for each row in the given table with addresses, finds the closest address from the Worldwide Address Data table within the radius of 5km. To speed up the function we will apply an iterative approach to the neighbor search - start from 10 meters and increase the search radius until a match is found or the maximum radius is reached. Run the following query:

CREATE OR REPLACE PROCEDURE GEOCODING_EXACT(

NAME_FOR_RESULT_TABLE TEXT,

LOCATIONS_TABLE_NAME TEXT,

LOCATIONS_ID_COLUMN_NAME TEXT,

LOCATIONS_COLUMN_NAME TEXT,

WWAD_TABLE_NAME TEXT,

WWAD_COLUMN_NAME TEXT

)

RETURNS TEXT

LANGUAGE SQL

AS $$

DECLARE

-- Initialize the search radius to 10 meters.

RADIUS REAL DEFAULT 10.0;

BEGIN

-- **********************************************************************

-- Procedure: GEOCODING_EXACT

-- Description: This procedure finds the closest point from the Worldwide

-- Address Data table for each location in the LOCATIONS_TABLE.

-- It iteratively increases the search radius until a match is

-- found or the maximum radius is reached.

-- **********************************************************************

-- Create or replace the result table with the required schema but no data.

EXECUTE IMMEDIATE '

CREATE OR REPLACE TABLE ' || NAME_FOR_RESULT_TABLE || ' AS

SELECT

' || LOCATIONS_ID_COLUMN_NAME || ',

' || LOCATIONS_COLUMN_NAME || ' AS LOCATION_POINT,

' || WWAD_COLUMN_NAME || ' AS CLOSEST_LOCATION_POINT,

t2.NUMBER,

t2.STREET,

t2.UNIT,

t2.CITY,

t2.DISTRICT,

t2.REGION,

t2.POSTCODE,

t2.COUNTRY,

0.0::REAL AS DISTANCE

FROM

' || LOCATIONS_TABLE_NAME || ' t1,

' || WWAD_TABLE_NAME || ' t2

LIMIT 0';

-- Define a sub-query to select locations not yet processed.

LET REMAINING_QUERY := '

SELECT

' || LOCATIONS_ID_COLUMN_NAME || ',

' || LOCATIONS_COLUMN_NAME || '

FROM

' || LOCATIONS_TABLE_NAME || '

WHERE

NOT EXISTS (

SELECT 1

FROM ' || NAME_FOR_RESULT_TABLE || ' tmp

WHERE ' || LOCATIONS_TABLE_NAME || '.' || LOCATIONS_ID_COLUMN_NAME || ' = tmp.' || LOCATIONS_ID_COLUMN_NAME || '

)';

-- Iteratively search for the closest point within increasing radius.

FOR I IN 1 TO 10 DO

-- Insert closest points into the result table for

-- locations within the current radius.

EXECUTE IMMEDIATE '

INSERT INTO ' || NAME_FOR_RESULT_TABLE || '

WITH REMAINING AS (' || :REMAINING_QUERY || ')

SELECT

' || LOCATIONS_ID_COLUMN_NAME || ',

' || LOCATIONS_COLUMN_NAME || ' AS LOCATION_POINT,

points.' || WWAD_COLUMN_NAME || ' AS CLOSEST_LOCATION_POINT,

points.NUMBER,

points.STREET,

points.UNIT,

points.CITY,

points.DISTRICT,

points.REGION,

points.POSTCODE,

points.COUNTRY,

ST_DISTANCE(' || LOCATIONS_COLUMN_NAME || ', points.' || WWAD_COLUMN_NAME || ') AS DISTANCE

FROM

REMAINING

JOIN

' || WWAD_TABLE_NAME || ' points

ON

ST_DWITHIN(

REMAINING.' || LOCATIONS_COLUMN_NAME || ',

points.' || WWAD_COLUMN_NAME || ',

' || RADIUS || '

)

QUALIFY

ROW_NUMBER() OVER (

PARTITION BY ' || LOCATIONS_ID_COLUMN_NAME || '

ORDER BY DISTANCE

) <= 1';

-- Double the radius for the next iteration.

RADIUS := RADIUS * 2;

END FOR;

END

$$;

Run the next query to call that procedure and store results of reverse geocoding to ADVANCED_ANALYTICS.PUBLIC.REVERSE_GEOCODED table:

CALL GEOCODING_EXACT('ADVANCED_ANALYTICS.PUBLIC.REVERSE_GEOCODED', 'ADVANCED_ANALYTICS.PUBLIC.GEOCODING_ADDRESSES', 'GEOID', 'GEOM', 'ADVANCED_ANALYTICS.PUBLIC.OPENADDRESS', 'LOCATION');

This query completed in 5.5 minutes on LARGE warehouse, which corresponds to about 2 USD. Let's now compare the address we get after the reverse geocoding (ADVANCED_ANALYTICS.PUBLIC.REVERSE_GEOCODED table) with the table that has the original address.

SELECT t1.geoid,

t2.street_address AS actual_address,

t1.street || ' ' || t1.number || ', ' || t1.postcode || ' ' || t1.city || ', ' || t1.country AS geocoded_address

FROM ADVANCED_ANALYTICS.PUBLIC.REVERSE_GEOCODED t1

INNER JOIN ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES t2

ON t1.geoid = t2.geoid

WHERE t1.distance < 100;

For 9830 records, the closest addresses we found are within 100 meters from the original address. This corresponds to 98.7% of cases. As we mentioned earlier, often for analysis you might not need the full address, and knowing a postcode is already good enough. Run the following query to see for how many records the geocoded postcode is the same as the original postcode:

SELECT count(*)

FROM ADVANCED_ANALYTICS.PUBLIC.REVERSE_GEOCODED t1

INNER JOIN ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES t2

ON t1.geoid = t2.geoid

WHERE t2.parsed_address:postcode::string = t1.postcode::string;

This query returned 9564 records, about 96% of the dataset, which is quite a good result.

Out of curiosity, let's see, for how many addresses the geocoded and initial address is the same up until the street name. Run the following query:

SELECT count(*)

FROM ADVANCED_ANALYTICS.PUBLIC.REVERSE_GEOCODED t1

INNER JOIN ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES t2

ON t1.geoid = t2.geoid

WHERE t2.parsed_address:postcode::string = t1.postcode

AND LOWER(t2.parsed_address:country::string) = LOWER(t1.country)

AND LOWER(t2.parsed_address:city::string) = LOWER(t1.city)

AND JAROWINKLER_SIMILARITY(LOWER(t2.parsed_address:street::string), LOWER(t1.street)) > 95;

82% of addresses correctly geocoded up to the street name. And to have a full picture, let's see how many records have the fully identical original and geocoded address:

SELECT count(*)

FROM ADVANCED_ANALYTICS.PUBLIC.REVERSE_GEOCODED t1

INNER JOIN ADVANCED_ANALYTICS.PUBLIC.GEOCODING_CLEANSED_ADDRESSES t2

ON t1.geoid = t2.geoid

WHERE t2.parsed_address:postcode::string = t1.postcode

AND t2.parsed_address:number::string = t1.number

AND LOWER(t2.parsed_address:country::string) = LOWER(t1.country)

AND LOWER(t2.parsed_address:city::string) = LOWER(t1.city)

AND JAROWINKLER_SIMILARITY(LOWER(t2.parsed_address:street::string), LOWER(t1.street)) > 95;

For 61% of addresses we were able to do reverse geocoding that matches reference dataset up to the rooftop.

Conclusion

In this lab, you have learned how to perform geocoding and reverse geocoding using free datasets and open-source tools. While this approach may not provide the highest possible accuracy, it offers a cost-effective solution for processing large datasets where some degree of inaccuracy is acceptable. It's important to mention that Worldwide Address Data that has more than 500M addresses for the whole world is one of many free datasets that you can get from Snowflake Marketplace and use for geocoding use cases. There are others, which you might consider for your use cases, here are just some examples:

- Overture Maps - Addresses - if you mainly need to geocode addresses in North America, another good option would be to use this dataset that has more than 200M addresses.

- US Addresses & PO - has more than 150M rows can be used as a source of information around locations of Points of Interests.

- French National Addresses - contains about 26M addresses in France.

- Dutch Addresses & Buildings Registration (BAG) - includes Dutch Addresses.

There is a high chance that datasets focused on particular counties have richer and more accurate data for those countries. And by amending queries from this lab you can find the best option for your needs.

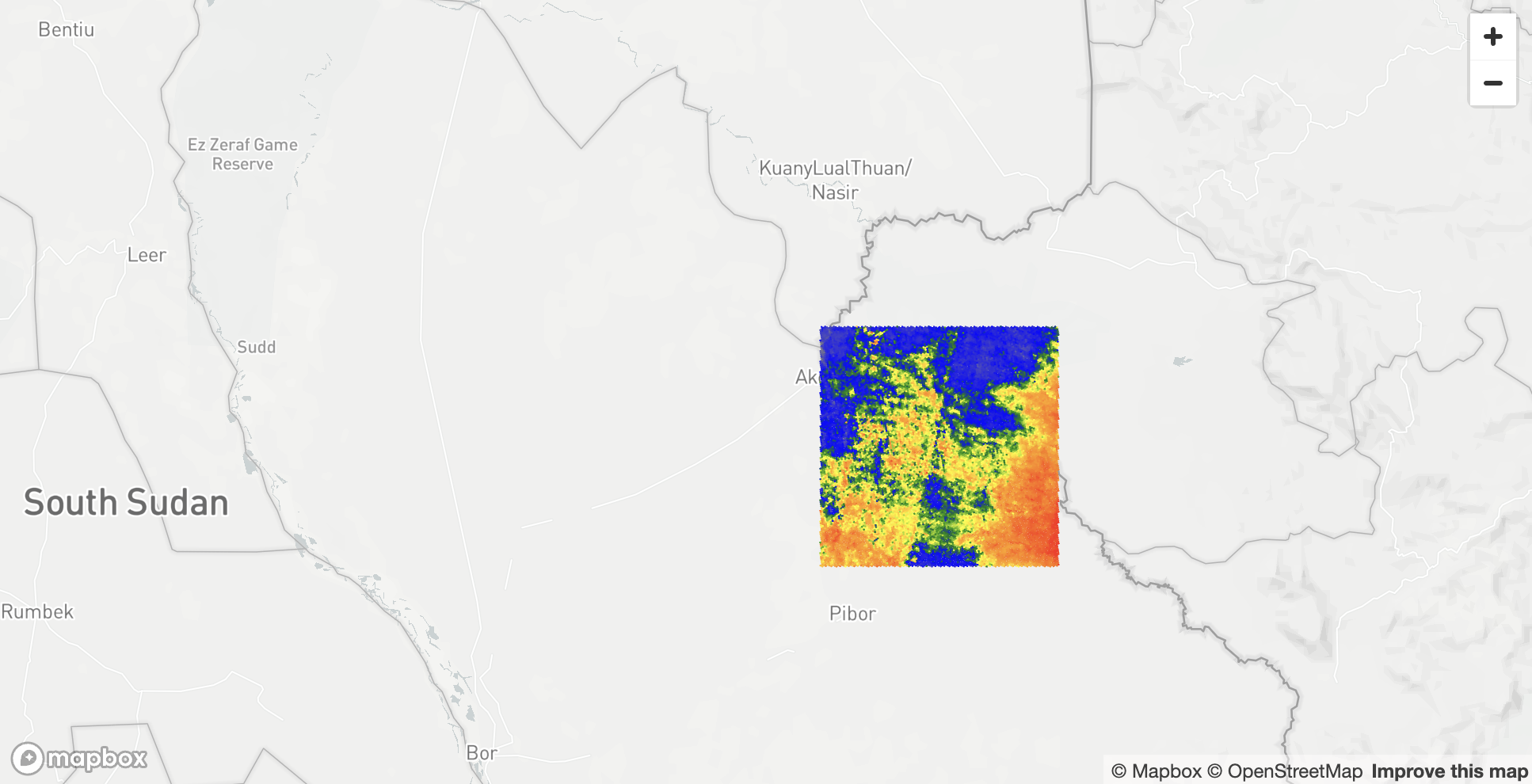

In this lab, we aim to show you how to predict the number of trips in the coming hours in each area of New York. To accomplish this, you will ingest the raw data and then aggregate it by hour and region. For simplicity, you will use Discrete Global Grid H3. The result will be an hourly time series, each representing the count of trips originating from distinct areas. Before running prediction and visualizing results, you will enrich data with third-party signals, such as information about holidays and offline sports events.

In this lab you will learn how to:

- Work with geospatial data

- Enrich data with new features

- Predict time-series of complex structure

This approach is not unique to trip forecasting but is equally applicable in various scenarios where predictive analysis is required. Examples include forecasting scooter or bike pickups, food delivery orders, sales across multiple retail outlets, or predicting the volume of cash withdrawals across an ATM network. Such models are invaluable for planning and optimization across various industries and services.

Step 1. Data acquisition

The New York Taxi and Limousine Commission (TLC) has provided detailed, anonymized customer travel data since 2009. Painted yellow cars can pick up passengers in any of the city's five boroughs. Raw data on yellow taxi rides can be found on the TLC website. This data is divided into files by month. Each file contains detailed trip information, you can read about it here. For our project, you will use an NY Taxi dataset for the 2014-2015 years from the CARTO Academy Marketplace listing.

- Navigate to the

Marketplacescreen using the menu on the left side of the window - Search for

CARTO Academyin the search bar - Find and click the

CARTO Academy - Data for tutorialstile - Once in the listing, click the big blue

Getbutton

Another dataset you will use is events data and you can also get it from the Snowflake Marketplace. It is provided by PredictHQ and called PredictHQ Quickstart Demo.

- Search for

PredictHQ Quickstart Demoin the search bar - Find and click the

Quickstart Demotile

- On the

Get Datascreen clickGet.

Congratulations! You have just created a shared database from a listing on the Snowflake Marketplace.

Step 2. Data transformation

In this step, you'll divide New York into uniformly sized regions and assign each taxi pick-up location to one of these regions. We aim to get a table with the number of taxi trips per hour for each region.

To achieve this division, you will use the Discrete Global Grid H3. H3 organizes the world into a grid of equal-sized hexagonal cells, with each cell identified by a unique code (either a string or an integer). This hierarchical grid system allows cells to be combined into larger cells or subdivided into smaller ones, facilitating efficient geospatial data processing.

H3 offers 16 different resolutions for dividing areas into hexagons, ranging from resolution 0, where the world is segmented into 122 large hexagons, to resolution 15. At this resolution, each hexagon is less than a square meter, covering the world with approximately 600 trillion hexagons. You can read more about resolutions here. For our task, we will use resolution 8, where the size of each hexagon is about 0.7 sq. km (0.3 sq. miles).

As a source of the trips data you will use TLC_YELLOW_TRIPS_2014 and TLC_YELLOW_TRIPS_2015 tables from the CARTO Academy listing. We are interested in the following fields:

- Pickup Time

- Dropoff Time

- Pickup Latitude

- Pickup Longitude

- Dropoff Latitude

- Dropoff Longitude

First, specify the default Database, Schema and the Warehouse:

USE ADVANCED_ANALYTICS.PUBLIC;

USE WAREHOUSE my_wh;

Since CARTO's tables contain raw data you might want to clean it before storing. In the following query you will do a few data cleaning steps:

- Remove rows that are outside of latitude/longitude allowed values

- Keep only trips with a duration longer than one minute and distances more than 10 meters.

And since you are interested in trip data for 2014 and 2015 you need to union TLC_YELLOW_TRIPS_2014 and TLC_YELLOW_TRIPS_2015 tables. On average, the execution time on the LARGE warehouse is under 4 minutes.

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides AS

SELECT CONVERT_TIMEZONE('UTC', 'America/New_York', to_timestamp(PICKUP_DATETIME::varchar)) PICKUP_TIME,

CONVERT_TIMEZONE('UTC', 'America/New_York', to_timestamp(DROPOFF_DATETIME::varchar)) DROPOFF_TIME,

st_point(PICKUP_LONGITUDE, PICKUP_LATITUDE) AS PICKUP_LOCATION,

st_point(DROPOFF_LONGITUDE, DROPOFF_LATITUDE) AS DROPOFF_LOCATION,

FROM CARTO_ACADEMY__DATA_FOR_TUTORIALS.CARTO.TLC_YELLOW_TRIPS_2014

WHERE pickup_latitude BETWEEN -90 AND 90

AND dropoff_latitude BETWEEN -90 AND 90

AND pickup_longitude BETWEEN -180 AND 180

AND dropoff_longitude BETWEEN -180 AND 180

AND st_distance(st_point(PICKUP_LONGITUDE, PICKUP_LATITUDE), st_point(DROPOFF_LONGITUDE, DROPOFF_LATITUDE)) > 10

AND TIMEDIFF(MINUTE, PICKUP_TIME, DROPOFF_TIME) > 1

UNION ALL

SELECT CONVERT_TIMEZONE('UTC', 'America/New_York', to_timestamp(PICKUP_DATETIME::varchar)) PICKUP_TIME,

CONVERT_TIMEZONE('UTC', 'America/New_York', to_timestamp(DROPOFF_DATETIME::varchar)) DROPOFF_TIME,

st_point(PICKUP_LONGITUDE, PICKUP_LATITUDE) AS PICKUP_LOCATION,

st_point(DROPOFF_LONGITUDE, DROPOFF_LATITUDE) AS DROPOFF_LOCATION,

FROM CARTO_ACADEMY__DATA_FOR_TUTORIALS.CARTO.TLC_YELLOW_TRIPS_2015

WHERE pickup_latitude BETWEEN -90 AND 90

AND dropoff_latitude BETWEEN -90 AND 90

AND pickup_longitude BETWEEN -180 AND 180

AND dropoff_longitude BETWEEN -180 AND 180

AND st_distance(PICKUP_LOCATION, DROPOFF_LOCATION) > 10

AND TIMEDIFF(MINUTE, PICKUP_TIME, DROPOFF_TIME) > 1;

Now you will create a table where, for each pair of timestamp/H3, we calculate the number of trips. You will strip off minutes and seconds and keep only hours.

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3 AS

SELECT TIME_SLICE(pickup_time, 60, 'minute', 'START') AS pickup_time,

H3_POINT_TO_CELL_string(pickup_location, 8) AS h3,

count(*) AS pickups

FROM ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides

GROUP BY 1, 2;

Since on resolution 8, you might have more than 1000 hexagons for New York, to speed up the training process, you will keep only hexagons that had more than 1M pickups in 2014. This is shown in the following code block.

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3

AS WITH all_hexagons AS

(SELECT h3,

SUM(pickups) AS total_pickups

FROM ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3

WHERE year(pickup_time) = 2014

GROUP BY 1)

SELECT t1.*

FROM ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3 t1

INNER JOIN all_hexagons t2 ON t1.h3 = t2.h3

WHERE total_pickups >= 1000000;

It's important to remember that if the raw data lacks records for a specific hour and area combination, the aggregated data for that period should be marked as 0. This step is crucial for accurate time series prediction. Run the following query to add records indicating that there were zero trips for any H3 location and timestamp pair without recorded trips.

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3 AS

WITH all_dates_hexagons AS (

SELECT DATEADD(HOUR, VALUE::int, '2014-01-01'::timestamp) AS pickup_time, h3

FROM TABLE(FLATTEN(ARRAY_GENERATE_RANGE(0, DATEDIFF('hour', '2014-01-01', '2015-12-31 23:59:00') + 1)))

CROSS JOIN (SELECT DISTINCT h3 FROM ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3)

)

SELECT t1.pickup_time,

t1.h3, IFF(t2.pickups IS NOT NULL, t2.pickups, 0) AS pickups

FROM all_dates_hexagons t1

LEFT JOIN ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3 t2

ON t1.pickup_time = t2.pickup_time AND t1.h3 = t2.h3;

Step 4. Data Enrichment

In this step, you will enhance our dataset with extra features that could improve the accuracy of our predictions. Cortex model for time series automatically encodes days of the week as a separate feature, but it makes sense to consider that public or school holidays could affect the demand for taxi services. Likewise, areas hosting sporting events might experience a surge in taxi pickups. To incorporate this insight, you will use data from PredictHQ - Quickstart Demo listing, which provides information on events in New York for the years 2014-2015.

Run the following query to enrich the data with holiday, and event information. For sports events, you will include only those with a high rank.

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3 AS

SELECT t1.*,

IFF(t2.category = 'school-holidays', 'school-holidays', 'None') AS school_holiday,

IFF(t3.category = 'public-holidays', ARRAY_TO_STRING(t3.labels, ', '), 'None') AS public_holiday,

IFF(t4.category = 'sports', t4.labels[0]::string, 'None') AS sport_event

FROM ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3 t1

LEFT JOIN (SELECT distinct title, category, event_start, event_end, labels

FROM QUICKSTART_DEMO.PREDICTHQ.PREDICTHQ_EVENTS_SNOWFLAKE_SUMMIT_2024

WHERE category = 'school-holidays' and title ilike 'New York%') t2

ON DATE(t1.pickup_time) between t2.event_start AND t2.event_end

LEFT JOIN (SELECT distinct title, category, event_start, event_end, labels

FROM QUICKSTART_DEMO.PREDICTHQ.PREDICTHQ_EVENTS_SNOWFLAKE_SUMMIT_2024

WHERE array_contains('holiday-national'::variant, labels)) t3

ON DATE(t1.pickup_time) between t3.event_start AND t3.event_end

LEFT JOIN (SELECT * from QUICKSTART_DEMO.PREDICTHQ.PREDICTHQ_EVENTS_SNOWFLAKE_SUMMIT_2024

WHERE phq_rank > 70 and category = 'sports') t4

ON t1.pickup_time = date_trunc('hour', t4.event_start)

AND t1.h3 = h3_point_to_cell_string(t4.geo, 8);

Step 5. Training and Prediction

In this step, you'll divide our dataset into two parts: the Training set and the Prediction set. The Training set will be used to train our machine learning model. It will include data from the entirety of 2014 and part of 2015, going up to June 5th, 2015. Run the following query to create the Training set:

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3_TRAIN AS

SELECT *

FROM ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3

WHERE date(pickup_time) < date('2015-06-05 12:00:00');

The prediction set, on the other hand, will contain data for one week starting June 5th, 2015. This setup allows us to make predictions on data that wasn't used during training.

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3_PREDICT AS

SELECT h3,

pickup_time,

SCHOOL_HOLIDAY,

PUBLIC_HOLIDAY,

SPORT_EVENT

FROM ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3

WHERE date(pickup_time) >= date('2015-06-05')

AND date(pickup_time) < date('2015-06-12');

Now that you have the Training and Prediction sets, you can run your model training step. In this step, you will use Snowflake's Cortex ML Forecasting function to train your ny_taxi_rides_model. You're telling the function it should train on ny_taxi_rides_h3_train – and that this table contains data for multiple distinct time series (series_colname => ‘h3'), one for each h3 in the table. The function will now automatically train one machine learning model for each h3. Note that you are also telling the model which column in our table to use as a timestamp and which column to treat as our "target" (i.e., the column you want to forecast). On average the query below completes in about 7 minutes on the LARGE warehouse.

CREATE OR REPLACE snowflake.ml.forecast ny_taxi_rides_model(

input_data => system$reference('table', 'ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3_TRAIN'),

series_colname => 'h3',

timestamp_colname => 'pickup_time',

target_colname => 'pickups');

Now you will predict the "future" demand for one week of test data. Run the following command to forecast demand for each H3 cell ID and store your results in the "forecasts" table.

Similar to what you did in the training step, you specify the data the model should use to generate its forecasts (ny_taxi_rides_h3_predict) and indicate which columns to use for identifying unique H3 and for timestamps.

BEGIN

CALL ny_taxi_rides_model!FORECAST(

INPUT_DATA => SYSTEM$REFERENCE('TABLE', 'ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3_PREDICT'),

SERIES_COLNAME => 'h3',

TIMESTAMP_COLNAME => 'pickup_time',

CONFIG_OBJECT => {'prediction_interval': 0.95}

);

-- These steps store your predictions to a table.

LET x := SQLID;

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_model_forecast AS

SELECT series::string as h3,

ts AS pickup_time,

-- If any forecasts or prediction intervals are negative you need to convert them to zero.

CASE WHEN forecast < 0 THEN 0 ELSE forecast END AS forecast,

CASE WHEN lower_bound < 0 THEN 0 ELSE lower_bound END AS lower_bound,

CASE WHEN upper_bound < 0 THEN 0 ELSE upper_bound END AS upper_bound

FROM TABLE(RESULT_SCAN(:x));

END;

Create a table with predicted and actual results:

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_compare AS

SELECT t1.h3,

t1.pickup_time,

t2.pickups,

round(t1.forecast, 0) as forecast

FROM ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_model_forecast t1

INNER JOIN ADVANCED_ANALYTICS.PUBLIC.NY_TAXI_RIDES_H3 t2

ON t1.h3 = t2.h3

AND t1.pickup_time = t2.pickup_time;

Now you will generate evaluation metrics and store them in the ny_taxi_rides_metrics table:

BEGIN

CALL ny_taxi_rides_model!show_evaluation_metrics();

LET x := SQLID;

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_metrics AS

SELECT series::string as h3,

metric_value,

error_metric

FROM TABLE(RESULT_SCAN(:x));

END;

The table ny_taxi_rides_metrics contains various metrics; please review what is available in the table. You should select a metric that allows uniform comparisons across all hexagons to understand the model's performance in each hexagon. Since trip volumes may vary among hexagons, the chosen metric should not be sensitive to absolute values. The Symmetric Mean Absolute Percentage Error (SMAPE) would be a suitable choice. Create a table with the list of hexagons and the SMAPE value for each:

CREATE OR REPLACE TABLE ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_metrics AS

SELECT h3, metric_value AS smape

FROM ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_metrics

WHERE error_metric::string = 'SMAPE'

order by 2 asc;

Step 6. Visualization and analysis

In this step, you will visualize the actual and predicted results and think on how you can improve our model. Open Projects > Streamlit > + Streamlit App. Give the new app a name, for example Demand Prediction - model analysis, and pick ADVANCED_ANALYTICS.PUBLIC as an app location.

Click on the packages tab and add pydeck, branca and plotly to the list of packages as our app will be using them.

Then copy-paste the following code to the editor and click Run:

import branca.colormap as cm

import datetime

import pandas as pd

import plotly.express as px

import pydeck as pdk

import streamlit as st

from snowflake.snowpark.context import get_active_session

@st.cache_data

def get_dataframe_from_raw_sql(query: str) -> pd.DataFrame:

session = get_active_session()

pandas_df = session.sql(query).to_pandas()

return pandas_df

def pydeck_chart_creation(

chart_df: pd.DataFrame,

coordinates: tuple = (40.742, -73.984),

elevation_3d: bool = False,

):

highest_count_df = 0 if chart_df is None else chart_df["COUNT"].max()

st.image('https://sfquickstarts.s3.us-west-1.amazonaws.com/hol_geo_spatial_ml_using_snowflake_cortex/gradient.png')

st.pydeck_chart(

pdk.Deck(

map_style=None,

initial_view_state=pdk.ViewState(

latitude=coordinates[0],

longitude=coordinates[1],

pitch=45,

zoom=10,

),

tooltip={"html": "<b>{H3}:</b> {COUNT}", "style": {"color": "white"}},

layers=[

pdk.Layer(

"H3HexagonLayer",

chart_df,

get_hexagon="H3",

get_fill_color="COLOR",

get_line_color="COLOR",

get_elevation=f"COUNT/{highest_count_df}",

auto_highlight=True,

elevation_scale=10000 if elevation_3d else 0,

pickable=True,

elevation_range=[0, 300],

extruded=True,

coverage=1,

opacity=0.3,

)

],

)

)

def generate_linear_color_map(colors: list, quantiles):

return cm.LinearColormap(

colors,

vmin=quantiles.min(),

vmax=quantiles.max(),

index=quantiles,

)

def render_plotly_line_chart(chart_df: pd.DataFrame):

fig = px.line(

chart_df,

x="PICKUP_TIME",

y=["PICKUPS", "FORECAST"],

color_discrete_sequence=["#D966FF", "#126481"],

markers=True,

)

fig.update_layout(yaxis_title="Pickups", xaxis_title="")

st.plotly_chart(fig, theme="streamlit", use_container_width=True)

st.set_page_config(layout="wide", initial_sidebar_state="expanded")

st.title("NY Pickup Location App :balloon:")

st.write("""An app that visualizes geo-temporal data from NY taxi pickups using H3 and time series.

It can be useful to visualize marketplace signals that are distributed spatially and temporally.""")

AVGLATITUDELONGITUDE = """SELECT

AVG(ST_Y(H3_CELL_TO_POINT(h3))) AS lat,

AVG(ST_X(h3_cell_to_point(h3))) AS lon,

FROM ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_compare"""

SQLQUERYTIMESERIES = """SELECT pickup_time, h3, forecast, pickups

FROM ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_compare"""

SQLQUERYMETRICS = """SELECT * FROM ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_metrics"""

df_avg_lat_long = get_dataframe_from_raw_sql(AVGLATITUDELONGITUDE)

avg_coordinate = (df_avg_lat_long.iloc[0, 0], df_avg_lat_long.iloc[0, 1])

df_metrics = get_dataframe_from_raw_sql(SQLQUERYMETRICS)

with st.sidebar:

initial_start_date = datetime.date(2015, 6, 6)

selected_date_range = st.date_input(

"Date Range:",

(initial_start_date, initial_start_date + datetime.timedelta(days=7)),

format="MM.DD.YYYY",)

tr_col_l, tr_col_r = st.columns(2)

with tr_col_l:

selected_start_time_range = st.time_input(

"Start Time Range",

datetime.time(0, 0),

key="selected_start_time_range",

step=3600,)

with tr_col_r:

selected_end_time_range = st.time_input(

"End Time Range:",

datetime.time(23, 00),

key="selected_end_time_range",

step=3600,)

h3_options = st.selectbox(

"H3 cells to display", (["All"] + df_metrics["H3"].to_list()))

with st.expander(":orange[Expand to see SMAPE metric]"):

df_metrics_filtered = df_metrics

if h3_options != "All":

df_metrics_filtered = df_metrics[df_metrics["H3"] == h3_options]

st.dataframe(df_metrics_filtered, hide_index=True, width=300)

chckbox_3d_value = st.checkbox(

"3D", key="chkbx_forecast", help="Renders H3 Hexagons in 3D")

DF_PICKUPS = None

DF_FORECAST = None

start_end_date_selected = len(selected_date_range) == 2

if start_end_date_selected:

sql_query_pickups = f"""SELECT h3,

SUM(pickups) AS COUNT

FROM ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_compare

WHERE pickup_time BETWEEN DATE('{selected_date_range[0]}') AND DATE('{selected_date_range[1]}')

AND TIME(pickup_time) BETWEEN '{selected_start_time_range}' AND '{selected_end_time_range}'

GROUP BY 1"""

sql_query_forecast = f"""SELECT h3,

sum(forecast) AS COUNT

FROM ADVANCED_ANALYTICS.PUBLIC.ny_taxi_rides_compare

WHERE pickup_time BETWEEN DATE('{selected_date_range[0]}') AND DATE('{selected_date_range[1]}')

AND TIME(pickup_time) BETWEEN '{selected_start_time_range}' AND '{selected_end_time_range}'

GROUP BY 1"""

colors_list = ["gray", "blue", "green", "yellow", "orange", "red"]

DF_PICKUPS = get_dataframe_from_raw_sql(sql_query_pickups)

quantiles_pickups = DF_PICKUPS["COUNT"].quantile([0, 0.25, 0.5, 0.75, 1])

color_map_pickups = generate_linear_color_map(colors_list, quantiles_pickups)

DF_PICKUPS["COLOR"] = DF_PICKUPS["COUNT"].apply(color_map_pickups.rgb_bytes_tuple)

DF_FORECAST = get_dataframe_from_raw_sql(sql_query_forecast)

quantiles_forecast = DF_FORECAST["COUNT"].quantile([0, 0.25, 0.5, 0.75, 1])