This quickstart will provide step-by-step instructions for how to build a simple CI/CD pipeline for Snowflake with GitHub Actions and Terraform. My hope is that this will provide you with enough details to get you started on your DevOps journey with Snowflake, GitHub Actions, and Terraform.

DevOps is concerned with automating the development, release and maintenance of software applications. As such, DevOps is very broad and covers the entire Software Development Life Cycle (SDLC). The landscape of software tools used to manage the entire SDLC is complex since there are many different required capabilities/tools, including:

- Requirements management

- Project management (Waterfall, Agile/Scrum)

- Source code management (Version Control)

- Build management (CI/CD)

- Test management (CI/CD)

- Release management (CI/CD)

This quickstart will focus primarily on automated release management for Snowflake by leveraging the GitHub Actions service from GitHub for the CI/CD and Terraform for the Database Change Management. Database Change Management (DCM) refers to a set of processes and tools which are used to manage the objects within a database. It's beyond the scope of this quickstart to provide details around the challenges with and approaches to automating the management of your database objects. If you're interested in more details, please see my blog post Embracing Agile Software Delivery and DevOps with Snowflake.

Let's begin with a brief overview of GitHub and Terraform.

Prerequisites

This quickstart assumes that you have a basic working knowledge of Git repositories.

What You'll Learn

- A brief history and overview of GitHub Actions

- A brief history and overview of Terraform and Terraform Cloud

- How database change management tools like Terraform work

- How a simple release pipeline works

- How to create CI/CD pipelines in GitHub Actions

- Ideas for more advanced CI/CD pipelines with stages

- How to get started with branching strategies

- How to get started with testing strategies

What You'll Need

You will need the following things before beginning:

- Snowflake

  - A Snowflake Account.

  - A Snowflake User created with appropriate permissions. This user will need permission to create databases.

- GitHub

  - A GitHub Account. If you don't already have a GitHub account you can create one for free. Visit the Join GitHub page to get started.

  - A GitHub Repository. If you don't already have a repository created, or would like to create a new one, then Create a new repository. For the type, select Public (although you could use either). And you can skip adding the README, .gitignore and license for now.

- Terraform Cloud

  - A Terraform Cloud Account. If you don't already have a Terraform Cloud account you can create one for free. Visit the Create an account page to get started.

- Integrated Development Environment (IDE)

  - Your favorite IDE with Git integration. If you don't already have a favorite IDE that integrates with Git I would recommend the great, free, open-source Visual Studio Code.

  - Your project repository cloned to your computer. For connection details about your Git repository, open the Repository and copy the HTTPS link provided near the top of the page. If you have at least one file in your repository then click on the green Code icon near the top of the page and copy the HTTPS link. Use that link in VS Code or your favorite IDE to clone the repo to your computer.

What You'll Build

- A simple, working release pipeline for Snowflake in GitHub Actions using Terraform

GitHub

GitHub provides a complete, end-to-end set of software development tools to manage the SDLC. In particular GitHub provides the following services (from GitHub's Features):

- Collaborative Coding

- Automation & CI/CD

- Security

- Client Apps

- Project Management

- Team Administration

- Community

GitHub Actions

"GitHub Actions makes it easy to automate all your software workflows, now with world-class CI/CD. Build, test, and deploy your code right from GitHub. Make code reviews, branch management, and issue triaging work the way you want" (from GitHub's GitHub Actions). GitHub Actions was first announced in October 2018 and has since become a popular CI/CD tool. To learn more about GitHub Actions, including migrating from other popular CI/CD tools to GitHub Actions check out Learn GitHub Actions.

This quickstart will be focused on the GitHub Actions service.

Terraform

Terraform is an open-source Infrastructure as Code (IaC) tool created by HashiCorp that "allows you to build, change, and version infrastructure safely and efficiently. This includes low-level components such as compute instances, storage, and networking, as well as high-level components such as DNS entries, SaaS features, etc. Terraform can manage both existing service providers and custom in-house solutions." (from Introduction to Terraform). With Terraform the primary way to describe your infrastructure is by creating human-readable, declarative configuration files using the high-level configuration language known as HashiCorp Configuration Language (HCL).

Chan Zuckerberg Provider

While Terraform began as an IaC tool, it has expanded and taken on many additional use cases (some listed above). Terraform can be extended to support these different use cases and systems through a Terraform Provider. There is no official, first-party Terraform-managed Provider for Snowflake, but the Chan Zuckerberg Initiative (CZI) has created a popular Terraform Provider for Snowflake.

Please note that this CZI Provider for Snowflake is a community-developed Provider, not an official Snowflake offering. It comes with no support or warranty.

State Files

Another really important thing to understand about Terraform is how it tracks the state of the resources/objects being managed. Many declarative style tools like this will do a real-time comparison between the objects defined in code and the deployed objects and then figure out what changes are required. But Terraform does not operate in this manner. Instead, it maintains a State file that records the objects it manages. See Terraform's overview of State and, in particular, their discussion of why they chose to require a State file in Purpose of Terraform State.

State files in Terraform introduce a few challenges. The most significant is that the State file can get out of sync with the actual deployed objects. This will happen if you use a different process/tool than Terraform to update any deployed object (including making manual changes to a deployed object). The State file can also get out of sync (or corrupted) when multiple developers/processes are trying to access it at the same time. See Terraform's Remote State page for recommended solutions, including Terraform Cloud, which will be discussed next.

Terraform Cloud

"Terraform Cloud is HashiCorp's managed service offering that eliminates the need for unnecessary tooling and documentation to use Terraform in production. Provision infrastructure securely and reliably in the cloud with free remote state storage. As you scale, add workspaces for better collaboration with your team." (from Why Terraform Cloud?)

Some of the key features include (from Why Terraform Cloud?):

- Remote state storage: Store your Terraform state file securely with encryption at rest. Track infrastructure changes over time, and restrict access to certain teams within your organization.

- Flexible Workflows: Run Terraform the way your team prefers. Execute runs from the CLI or a UI, your version control system, or integrate them into your existing workflows with an API.

- Version Control (VCS) integration: Use version control to store and collaborate on Terraform configurations. Terraform Cloud can automate a run as soon as a pull request is merged into a main branch.

- Collaborate on infrastructure changes: Facilitate collaboration on your team. Review and comment on plans prior to executing any change to infrastructure.

As discussed in the Overview section, you will need to have a Terraform Cloud Account for this quickstart. If you don't already have a Terraform Cloud account you can create one for free. Visit the Create an account page to get started. After you create your account you'll be asked to provide an organization name.

Begin by logging in to your Terraform Cloud account. Please note your organization name, as we'll need it later. If you've forgotten, you can find your organization name in the top navigation bar and in the URL.

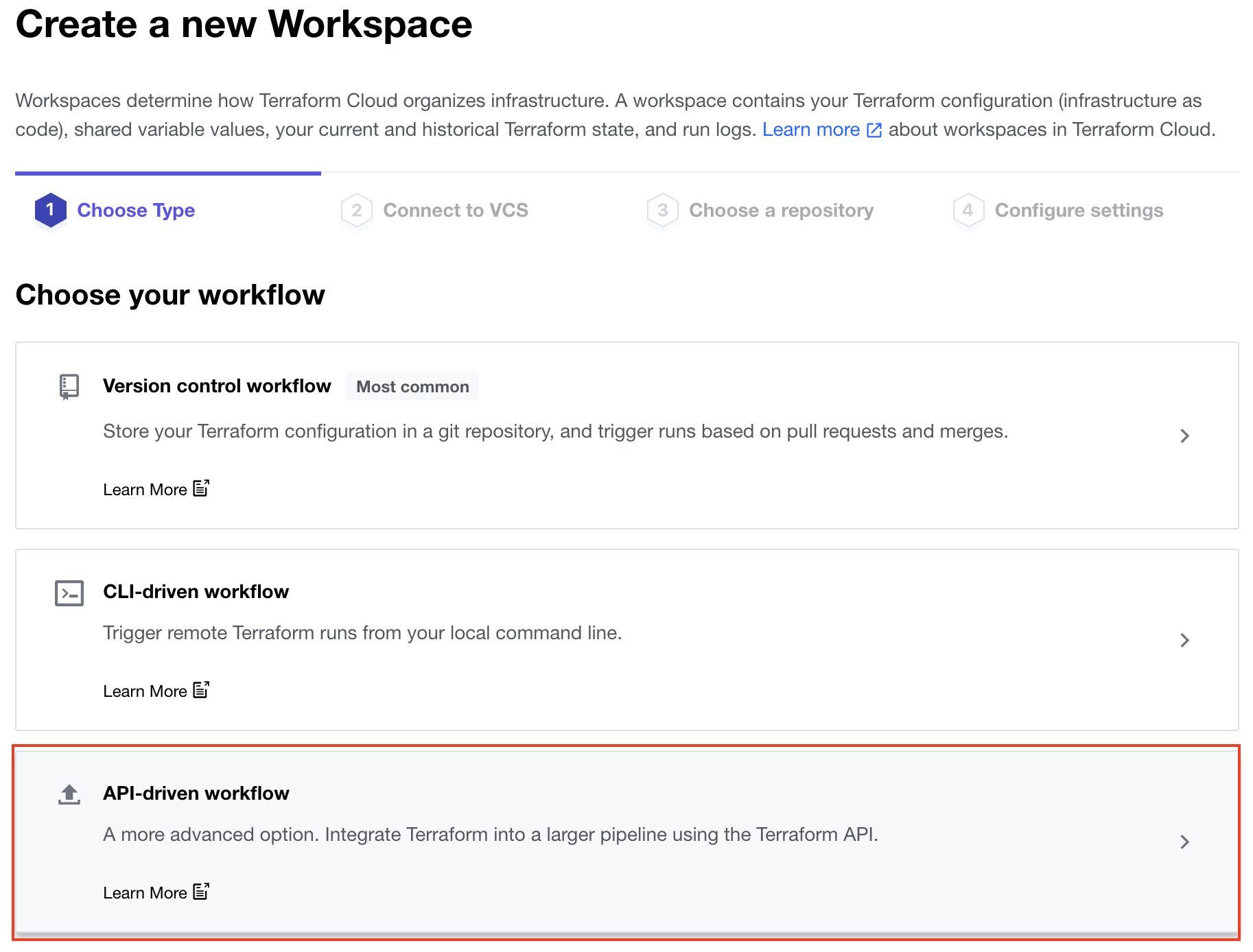

Create a new Workspace

From the Workspaces page click on the "+ New workspace" button near the top right of the page. On the first page, where it asks you to choose the type of workflow, select "API-driven workflow".

On the second page, where it asks for the "Workspace Name" enter gh-actions-demo and then click the "Create workspace" button at the bottom of the page.

Setup Environment Variables

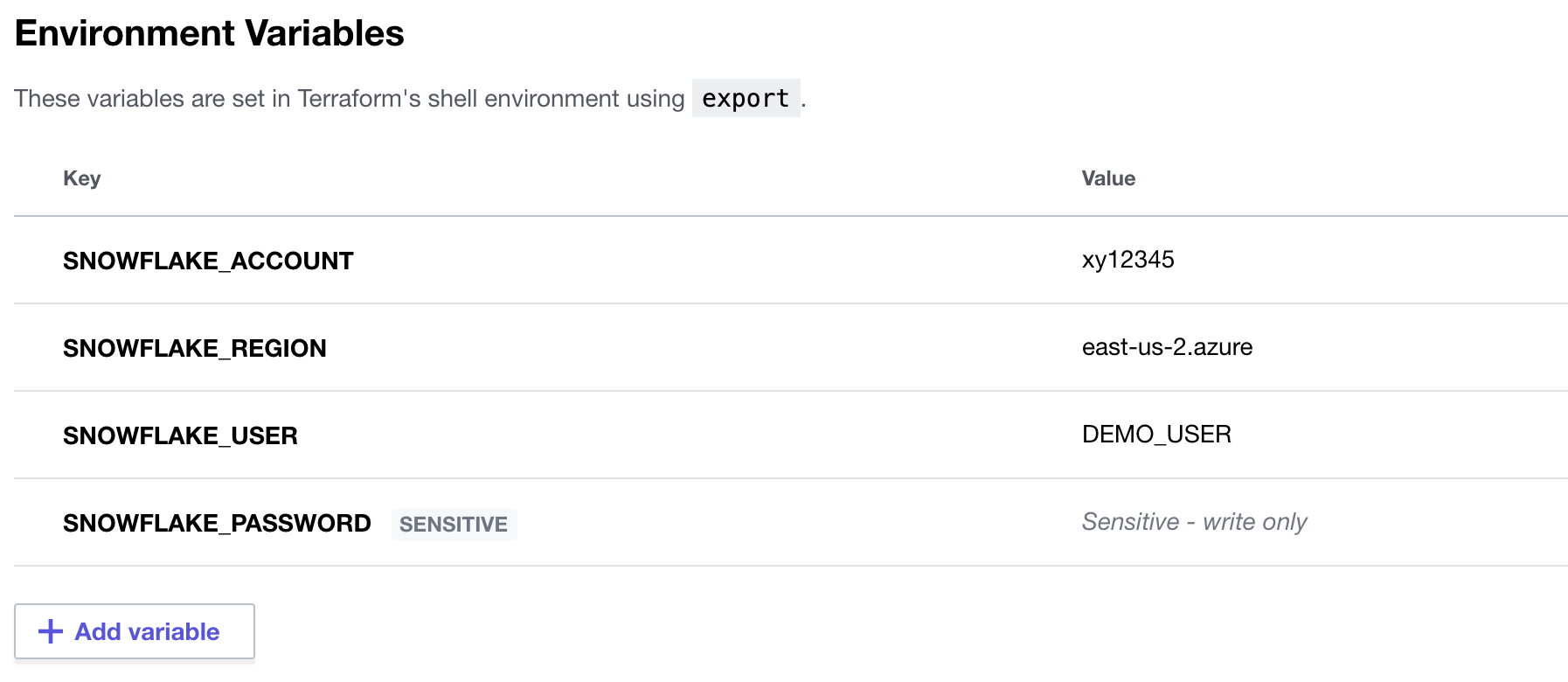

In order for Terraform Cloud to be able to connect to your Snowflake account you'll need to store the settings in Environment variables. Fortunately, Terraform Cloud makes this easy. From your new workspace homepage click on the "Variables" tab. Then for each variable listed below click on "+ Add variable" button (under the "Environment Variables" section) and enter the name given below along with the appropriate value (adjusting as appropriate).

Variable key | Variable value | Sensitive? |

SNOWFLAKE_ACCOUNT | xy12345 | No |

SNOWFLAKE_REGION | east-us-2.azure | No |

SNOWFLAKE_USER | DEMO_USER | No |

SNOWFLAKE_PASSWORD | ***** | Yes |

When you're finished adding all the secrets, the page should look like this:

Create an API Token

The final thing we need to do in Terraform Cloud is to create an API Token so that GitHub Actions can securely authenticate with Terraform Cloud. Click on your user icon near the top right of the screen and then click on "User settings". Then, in the left navigation bar of the user settings page, click on the "Tokens" tab.

Click on the "Create an API token" button, give your token a "Description" (like GitHub Actions) and then click on the "Create API token" button. Pay careful attention on the next screen. You need to save the API token because once you click on the "Done" button the token will not be displayed again. Once you've saved the token, click the "Done" button.

Create Actions Secrets

Action Secrets in GitHub are used to securely store values/variables which will be used in your CI/CD pipelines. In this step we will create a secret to store the API token to Terraform Cloud.

From the repository, click on the "Settings" tab near the top of the page. From the Settings page, click on the "Secrets" tab in the left hand navigation. The "Actions" secrets should be selected.

Click on the "New repository secret" button near the top right of the page. For the secret "Name" enter TF_API_TOKEN and for the "Value" enter the API token value you saved from the previous step.

Action Workflows

Action Workflows represent automated pipelines, which includes both build and release pipelines. They are defined as YAML files and stored in your repository in a directory called .github/workflows. In this step we will create a deployment workflow which will run Terraform and deploy changes to our Snowflake account.

- From the repository, click on the "Actions" tab near the top middle of the page.

- Click on the "set up a workflow yourself ->" link (if you already have a workflow defined click on the "new workflow" button and then the "set up a workflow yourself ->" link)

- On the new workflow page

  - Name the workflow snowflake-terraform-demo.yml

  - In the "Edit new file" box, replace the contents with the following:

    name: "Snowflake Terraform Demo Workflow"

    # Run the workflow on every push to the main branch
    on:
      push:
        branches:
          - main

    jobs:
      snowflake-terraform-demo:
        name: "Snowflake Terraform Demo Job"
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v2

          # Install the Terraform CLI and authenticate it to Terraform Cloud
          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v1
            with:
              cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

          - name: Terraform Format
            id: fmt
            run: terraform fmt -check

          - name: Terraform Init
            id: init
            run: terraform init

          - name: Terraform Validate
            id: validate
            run: terraform validate -no-color

          # Deploy the changes to Snowflake without an interactive prompt
          - name: Terraform Apply
            id: apply
            run: terraform apply -auto-approve

Finally, click on the green "Start commit" button near the top right of the page and then click on the green "Commit new file" in the pop up window (you can leave the default comments and commit settings). You'll now be taken to the workflow folder in your repository.

A few things to point out from the YAML pipeline definition:

- The on: definition configures the pipeline to automatically run when a change is pushed to the main branch of the repository. So any change committed in a different branch will not automatically trigger the workflow to run.

- Please note that if you are re-using an existing GitHub repository it might retain the old master branch naming. If so, please update the YAML above (see the on: section).

- We're using the default GitHub-hosted Linux agent to execute the pipeline.

Open up your cloned GitHub repository in your favorite IDE and create a new file in the root named main.tf with the following contents. Please be sure to replace the organization name with your Terraform Cloud organization name.

    terraform {
      required_providers {
        snowflake = {
          source  = "chanzuckerberg/snowflake"
          version = "0.25.17"
        }
      }

      # Store the State file remotely in the Terraform Cloud workspace
      backend "remote" {
        organization = "my-organization-name"

        workspaces {
          name = "gh-actions-demo"
        }
      }
    }

    # Connection settings come from the SNOWFLAKE_* environment variables
    provider "snowflake" {
    }

    resource "snowflake_database" "demo_db" {
      name    = "DEMO_DB"
      comment = "Database for Snowflake Terraform demo"
    }

Then commit the new script and push the changes to your GitHub repository. By pushing this commit to our GitHub repository the new workflow we created in the previous step will run automatically.
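For reference, the empty provider "snowflake" block in main.tf works because the provider picks up its connection settings from the SNOWFLAKE_* environment variables defined in the Terraform Cloud workspace. As a rough illustration only (you do not need to add this to main.tf), the sketch below shows what an explicit configuration might look like. The argument names reflect my understanding of this version of the CZI provider, the snowflake_password variable is a hypothetical name introduced for the example, and the account, region, and user values are the placeholder values from the variables table earlier.

```hcl
# Illustrative sketch only: the quickstart keeps the provider block empty
# and relies on the environment variables set in Terraform Cloud instead.

# Hypothetical input variable (not part of the quickstart) so that the
# password never appears in source control. The "sensitive" argument
# assumes Terraform 0.14 or later.
variable "snowflake_password" {
  type      = string
  sensitive = true
}

provider "snowflake" {
  account  = "xy12345"              # otherwise read from SNOWFLAKE_ACCOUNT
  region   = "east-us-2.azure"      # otherwise read from SNOWFLAKE_REGION
  username = "DEMO_USER"            # otherwise read from SNOWFLAKE_USER
  password = var.snowflake_password # otherwise read from SNOWFLAKE_PASSWORD
}
```

Keeping these values in workspace environment variables, as this quickstart does, means the same main.tf can be pointed at a different Snowflake account without any code changes.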

By now your first database migration should have been successfully deployed to Snowflake, and you should now have a DEMO_DB database available. There are a few different places that you should check to confirm that everything deployed successfully, or to help you debug in the event that an error happened.

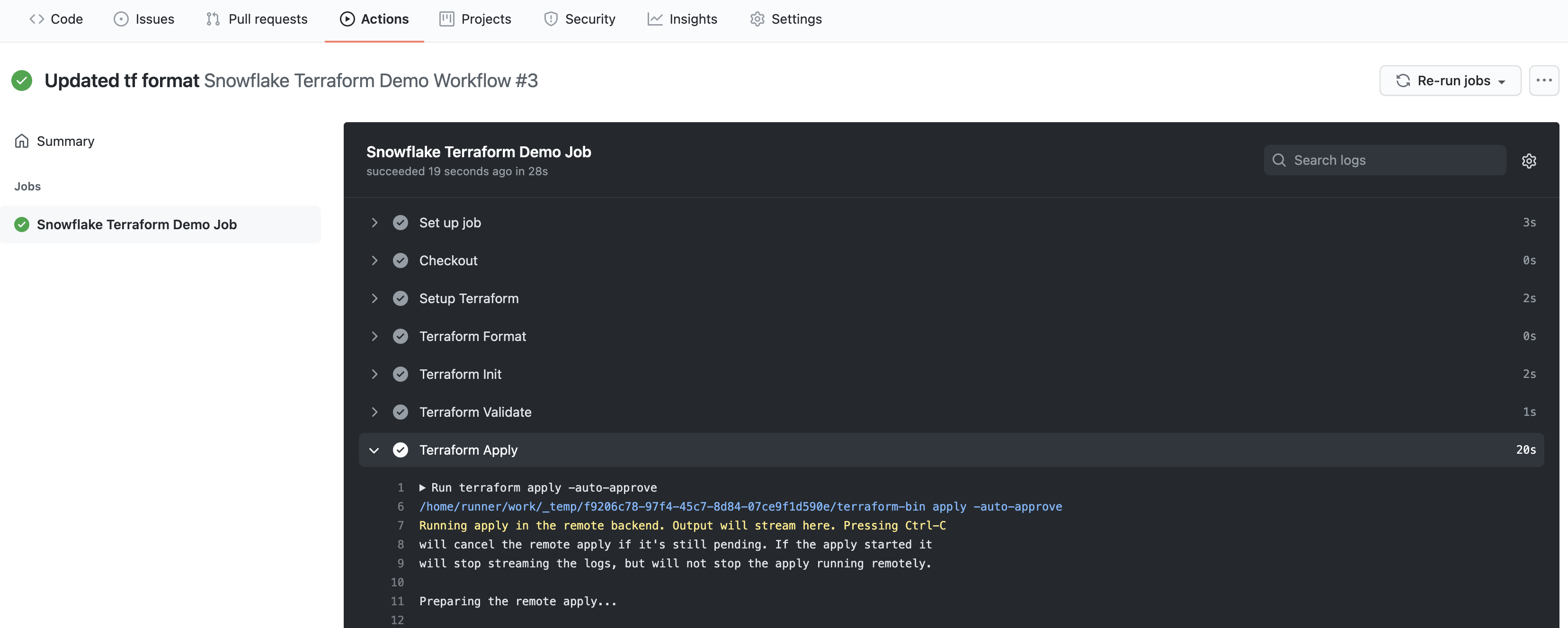

GitHub Actions Log

From your repository in GitHub, click on the "Actions" tab. If everything went well, you should see a successful workflow run listed. But either way you should see the run listed under the "All workflows". To see details about the run click on the run name. From the run overview page you can further click on the job name (it should be Snowflake Terraform Demo Job) in the left hand navigation bar or on the node in the yaml file viewer. Here you can browse through the output from the various steps. In particular you might want to review the output from the Terraform Apply step.

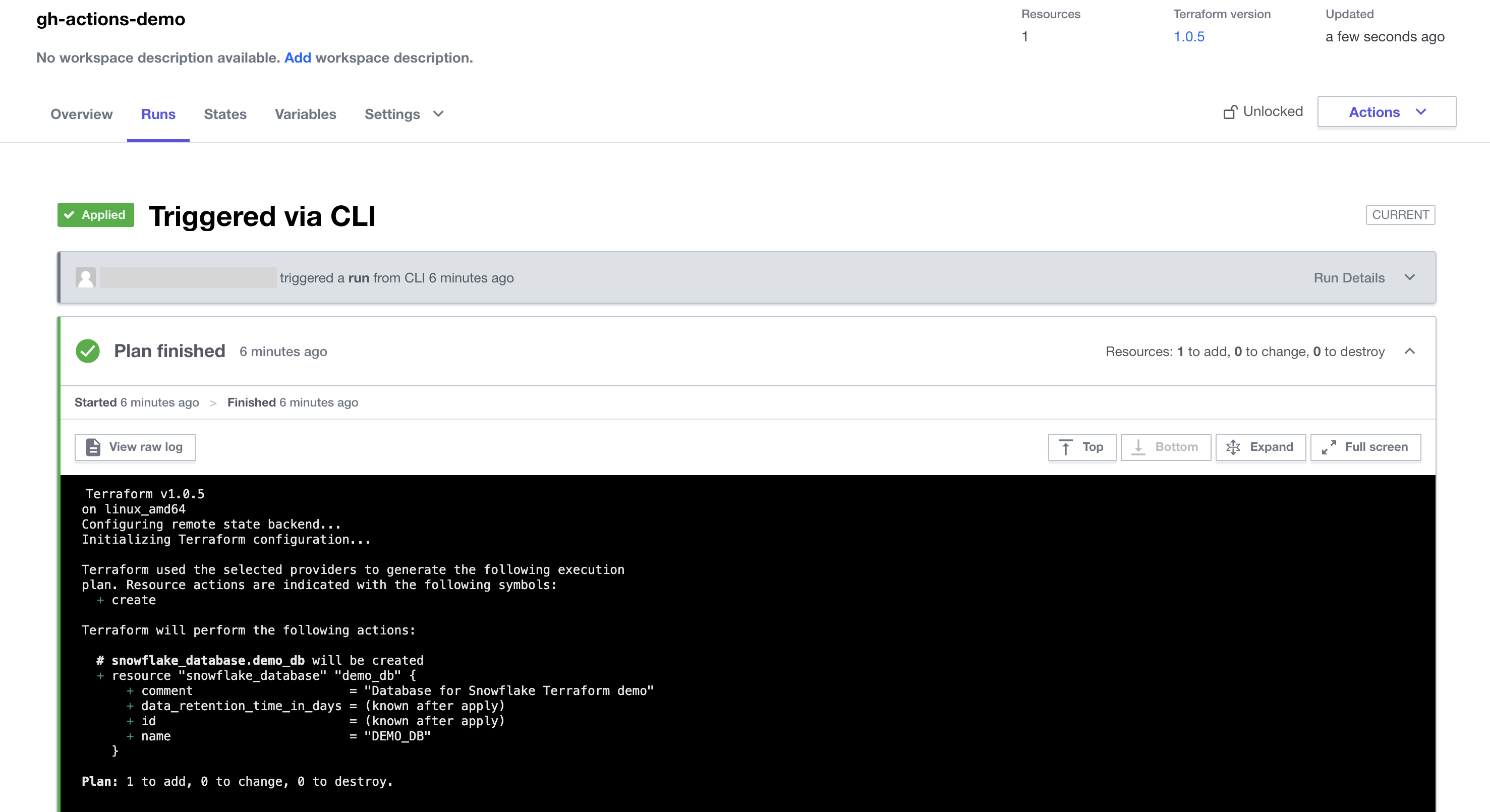

Terraform Cloud Log

While you'll generally be able to see all the Terraform output in the GitHub Actions logs, you may need to also view the logs on Terraform Cloud. From your Terraform Cloud Workspace, click on the "Runs" tab. Here you will see each run listed out, and for the purposes of this quickstart, each run here corresponds to a run in GitHub Actions. Click on the run to open it and view the output from the various steps.

Snowflake Objects

Log in to your Snowflake account and you should see your new DEMO_DB database! Additionally, you can review the queries that were executed by Terraform by clicking on the "History" tab at the top of the window.

Now that we've successfully deployed our first change to Snowflake, it's time to make a second one. This time we will add a schema to the DEMO_DB and have it deployed through our automated pipeline.

Open up your cloned repository in your favorite IDE and edit the main.tf file by appending the following lines to the end of the file:

    resource "snowflake_schema" "demo_schema" {
      database = snowflake_database.demo_db.name
      name     = "DEMO_SCHEMA"
      comment  = "Schema for Snowflake Terraform demo"
    }

Then commit the changes and push them to your GitHub repository. Because of the continuous integration trigger we created in the YAML definition, your workflow should have automatically started a new run. Toggle back to your GitHub and open up the "Actions" page. From there open up the most recent workflow run and view the logs. Go through the same steps you did in the previous section to confirm that the new DEMO_SCHEMA has been deployed successfully.
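Because the schema's database argument refers to snowflake_database.demo_db.name rather than repeating the literal "DEMO_DB", Terraform can infer that the database has to exist before the schema is created. If you would like the apply log to state what was deployed, one optional addition is a Terraform output built from the same references. This is a sketch rather than part of the original quickstart, and the output name demo_schema_path is hypothetical.

```hcl
# Optional sketch: report the database and schema names at the end of each
# apply. "demo_schema_path" is a hypothetical output name.
output "demo_schema_path" {
  description = "Database and schema created by this configuration"
  value       = "${snowflake_database.demo_db.name}.${snowflake_schema.demo_schema.name}"
}
```

With the names used in this quickstart, the value should appear as DEMO_DB.DEMO_SCHEMA in the output of the Terraform Apply step.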

Congratulations, you now have a working CI/CD pipeline with Terraform and Snowflake!

In the previous sections we created and tested a simple GitHub Actions workflow with Terraform. This section provides a more advanced workflow that you can test out. This one adds the capability for having Terraform validate and plan a change before it's actually deployed. This pipeline adds CI triggers that cause it to run when a Pull Request (PR) is created/updated. During that process it will run a terraform plan and stick the results in the PR itself for easy review. Please give it a try!

    name: "Snowflake Terraform Demo Workflow"

    # Run on pushes to main and on every pull request
    on:
      push:
        branches:
          - main
      pull_request:

    jobs:
      snowflake-terraform-demo:
        name: "Snowflake Terraform Demo Job"
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v2

          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v1
            with:
              cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

          - name: Terraform Format
            id: fmt
            run: terraform fmt -check

          - name: Terraform Init
            id: init
            run: terraform init

          - name: Terraform Validate
            id: validate
            run: terraform validate -no-color

          # Only plan on pull requests; a failure is reported by a later step
          - name: Terraform Plan
            id: plan
            if: github.event_name == 'pull_request'
            run: terraform plan -no-color
            continue-on-error: true

          # Post the step outcomes and the plan as a comment on the pull request
          - uses: actions/github-script@0.9.0
            if: github.event_name == 'pull_request'
            env:
              PLAN: "terraform\n${{ steps.plan.outputs.stdout }}"
            with:
              github-token: ${{ secrets.GITHUB_TOKEN }}
              script: |
                const output = `#### Terraform Format and Style 🖌\`${{ steps.fmt.outcome }}\`
                #### Terraform Initialization ⚙️\`${{ steps.init.outcome }}\`
                #### Terraform Validation 🤖\`${{ steps.validate.outcome }}\`
                #### Terraform Plan 📖\`${{ steps.plan.outcome }}\`

                <details><summary>Show Plan</summary>

                \`\`\`\n
                ${process.env.PLAN}
                \`\`\`

                </details>

                *Pusher: @${{ github.actor }}, Action: \`${{ github.event_name }}\`, Working Directory: \`${{ env.tf_actions_working_dir }}\`, Workflow: \`${{ github.workflow }}\`*`;

                github.issues.createComment({
                  issue_number: context.issue.number,
                  owner: context.repo.owner,
                  repo: context.repo.repo,
                  body: output
                })

          # Fail the job if the plan step failed
          - name: Terraform Plan Status
            if: steps.plan.outcome == 'failure'
            run: exit 1

          # Only apply when the change has been pushed to main
          - name: Terraform Apply
            if: github.ref == 'refs/heads/main' && github.event_name == 'push'
            run: terraform apply -auto-approve

This workflow was adapted from Automate Terraform with GitHub Actions.

So now that you've got your first Snowflake CI/CD pipeline set up, what's next? The software development life cycle, including CI/CD pipelines, gets much more complicated in the real-world. Best practices include pushing changes through a series of environments, adopting a branching strategy, and incorporating a comprehensive testing strategy, to name a few.

Pipeline Stages

In the real-world you will have multiple stages in your build and release pipelines. A simple, helpful way to think about stages in a deployment pipeline is to think about them as environments, such as dev, test, and prod. Your GitHub Actions workflow can be extended to include a stage for each of your environments. For more details around how to work with environments, please refer to Environments in GitHub.
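On the Terraform side, one way to support a stage per environment is to parameterize object names by environment. The sketch below is an illustration under stated assumptions rather than part of this quickstart: the environment variable, its allowed values, and the DEMO_DB_<ENV> naming convention are all hypothetical, and the resource shown would replace the existing demo_db definition in main.tf rather than sit alongside it. Each environment would also need its own State, for example a separate Terraform Cloud workspace with the variable's value set there.

```hcl
# Hypothetical input variable selecting the target environment.
# The validation block assumes Terraform 0.13 or later.
variable "environment" {
  type        = string
  description = "Target environment for this run"
  default     = "dev"

  validation {
    condition     = contains(["dev", "test", "prod"], var.environment)
    error_message = "The environment must be one of: dev, test, prod."
  }
}

# Replaces the earlier demo_db definition: the database name now carries an
# environment suffix, e.g. DEMO_DB_DEV for the default value.
resource "snowflake_database" "demo_db" {
  name    = "DEMO_DB_${upper(var.environment)}"
  comment = "Database for Snowflake Terraform demo (${var.environment})"
}
```

Deriving names from a single variable keeps the configuration identical across environments, so the change that reaches prod is the same one that was exercised in dev and test.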

Branching Strategy

Branching strategies can be complex, but there are a few popular ones out there that can help get you started. To begin with I would recommend keeping it simple with GitHub flow (and see also an explanation of GitHub flow by Scott Chacon in 2011). Another simple framework to consider is GitLab flow.

Testing Strategy

Testing is an important part of any software development process, and is absolutely critical when it comes to automated software delivery. But testing for databases and data pipelines is complicated and there are many approaches, frameworks, and tools out there. In my opinion, the simplest way to get started testing data pipelines is with dbt and the dbt Test features. Another popular Python-based testing tool to consider is Great Expectations.

With that you should now have a working CI/CD pipeline in GitHub Actions and some helpful ideas for next steps on your DevOps journey with Snowflake. Good luck!

What We've Covered

- A brief history and overview of GitHub Actions

- A brief history and overview of Terraform and Terraform Cloud

- How database change management tools like Terraform work

- How a simple release pipeline works

- How to create CI/CD pipelines in GitHub Actions

- Ideas for more advanced CI/CD pipelines with stages

- How to get started with branching strategies

- How to get started with testing strategies